EDIT #3 - The Snapshot vote to ratify these results is live. Thank you to everyone who helped us get to this point, and for your patience during the process. Please vote here: Snapshot

EDIT #2 - We have posted a second (and hopefully final) iteration of revised results. You can see these final results here, and more information on the additional Sybil detection that was done in the comment below. Thank you to everyone in the community who helped review and flag discrepancies in the results, helping us filter out more Sybils. We plan to move forward with ratifying these results via a Snapshot vote in the coming days.

EDIT - We have posted revised results with more robust Sybil detection here. You can see the full write-up towards the bottom of this thread (direct link).

TLDR: The Beta Round final results are live here! We propose ~5 days for discussion and review, followed by a 5-day Snapshot vote to ratify, before processing final payouts.

Background

First of all, I’d like to thank @ale.k @koday @umarkhaneth @M0nkeyFl0wer @jon-spark-eco @nategosselin @gravityblast (and many more from the PGF, Allo, and other workstreams) for all the work that went into running this round and helping to get these final QF calculations.

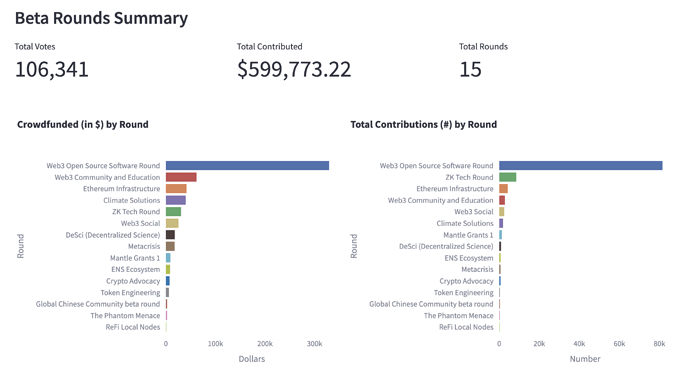

The Beta Round was the second Gitcoin Grants Program QF round on Grants Stack. It ran from April 25th to May 9th and, unlike the Alpha Round, was open for anyone to participate (as long as projects met the eligibility criteria* of one of the Core Rounds). $1.25 million in matching was available across 5 distinct rounds (and correspondingly 5 smart contracts). There were also 10 externally operated Featured Rounds; however, this post only pertains to the Core Rounds operated by Gitcoin. These rounds and their matching pools are broken down below:

Web3 Open Source Software

- Matching pool: 350,000 DAI

- Matching cap: 4%

Climate Solutions

- Matching pool: 350,000 DAI

- Matching cap: 10%

Web3 Community & Education

- Matching pool: 200,000 DAI

- Matching cap: 6%

Ethereum Infrastructure

- Matching pool: 200,000 DAI

- Matching cap: 10%

ZK Tech

- Matching pool: 150,000 DAI

- Matching cap: 10%
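For reference, a percentage cap translates into a per-grant ceiling in DAI. The sketch below works that out for the five Core Rounds, pairing each pool with the cap listed alongside it. The round labels follow the order in which the rounds are named elsewhere in this post (summary list and matching-cap list), so read the label-to-pool mapping as an assumption.

```python
# Per-grant matching ceilings for the Beta Round Core Rounds.
# Assumption: round labels follow the order used elsewhere in this post
# (OSS, Climate, Community & Education, Eth Infra, ZK Tech).
ROUNDS = {
    "Web3 Open Source Software": (350_000, 4),
    "Climate Solutions": (350_000, 10),
    "Web3 Community & Education": (200_000, 6),
    "Ethereum Infrastructure": (200_000, 10),
    "ZK Tech": (150_000, 10),
}


def per_grant_cap(pool_dai, cap_pct):
    """Largest match (in DAI) any single grant can receive in a round."""
    return pool_dai * cap_pct // 100


caps = {name: per_grant_cap(pool, pct) for name, (pool, pct) in ROUNDS.items()}
total_pool = sum(pool for pool, _ in ROUNDS.values())

for name, cap in caps.items():
    print(f"{name}: {cap:,} DAI")
print(f"Total matching: {total_pool:,} DAI")
```

The five pools sum to 1,250,000 DAI, consistent with the $1.25 million total above.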

*To view and/or weigh in on the discussion of platform and core-round specific eligibility criteria, see the following posts in the Gov Forum:

Results & Ratification

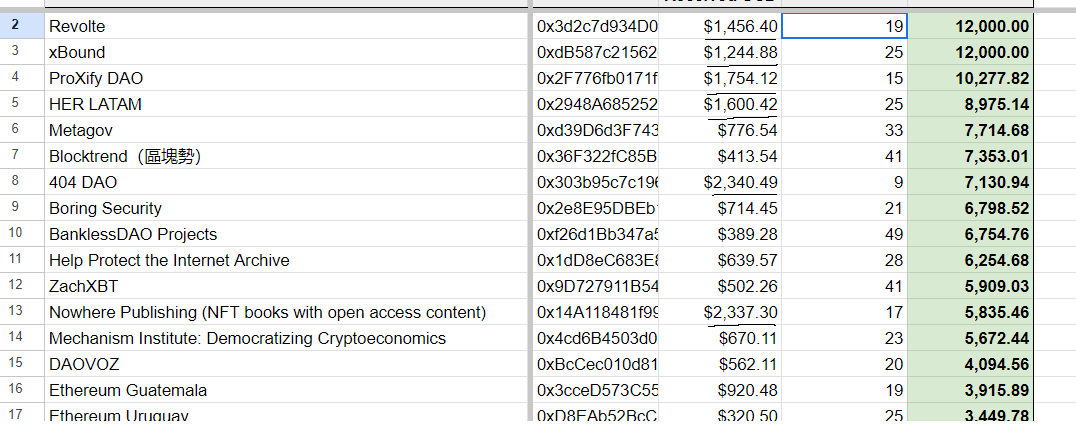

The full list of final results & payout amounts can be found here. Below we’ll cover how these results were calculated and other decisions that were made.

We ask our Community Stewards to ratify the Beta Round payout amounts as being correct, fair, and abiding by community norms, including the implementation of Passport scoring as well as Sybil/fraud judgments and squelching made by the Public Goods Funding workstream.

If stewards and the community approve after this discussion, we suggest a Snapshot vote running from Friday, June 2nd, to Wednesday, June 7th. If the vote passes, the multisig signers can then approve a transaction to fund the round contracts, the results will be finalized on-chain, and payouts will be processed shortly after.

Options to vote on:

1. Ratify the round results

You ratify the results as reported by the Public Goods Funding workstream and request that the keyholders of the community multisig pay out funds according to the Beta Round final payout amounts.

2. Request further deliberation

You do not ratify the results and request that the keyholders of the community multisig delay payment until further notice.

Round and Results Calculation Details

Note: The final results shown here were calculated after applying various eligibility controls and donor squelching (described below), so the numbers may not exactly match what you see on-chain or on the platform’s front end. For example, donations from users without a sufficient Passport score, or donations under the minimum, are not counted in the aggregate “Total Received USD” or “Contributions” columns for your grant.

To summarize:

- The Gitcoin Program Beta Round was conducted on Grants Stack from April 25th to May 9th, 2023.

- It consisted of 5 Core Rounds with their own matching pools: Web3 Open Source Software, Climate Solutions, Web3 Community & Education, Ethereum Infrastructure, and ZK Tech.

- 470 grants were approved based on round-specific and general platform eligibility requirements and split across the rounds.

- A total of ~$600k was donated across the 5 core rounds and 10 featured rounds (see this Dashboard for detailed stats).

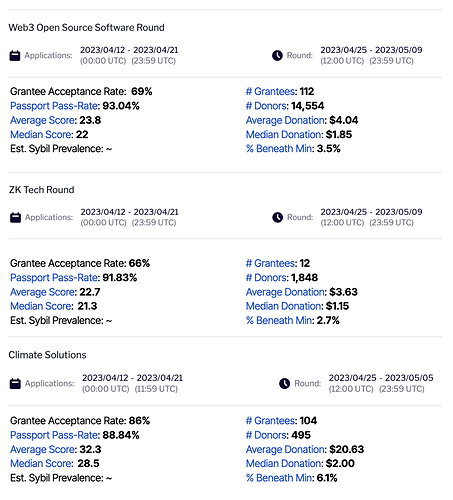

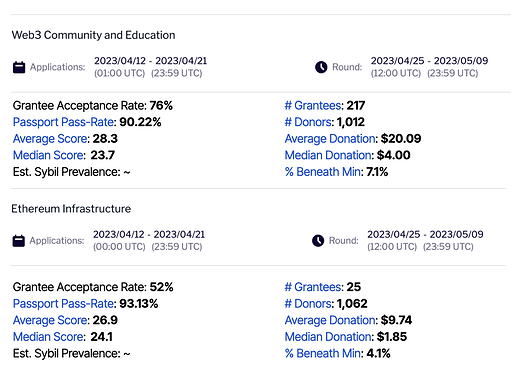

Key Metrics by Round

Matching Calculation Approach

The results shared above differ from the raw output of the QF algorithm because of a few adjustments:

- Donors must have a sufficient Passport score (threshold for all rounds = 15)

- $1 donation minimum

- Removal of Sybil attackers and bots

- Round-specific percentage matching caps imposed

Passport scores

The Gitcoin Passport minimum score threshold was set to 15 for all Beta rounds, notably lower than the threshold used in the Alpha Round. As a result, we saw a higher Passport pass rate (shared in the data above). A donor’s highest score at any point during the 2-week round was applied to all of their donations, even if they donated first and raised their score later. Only donations from users with a Passport and a sufficient score were counted in the matching calculations.
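The "highest score during the round" rule can be sketched as a simple filter. This is a minimal illustration, not Gitcoin's pipeline: the record shapes (`(donor, score)` snapshots and donation dicts with a `donor` key) are hypothetical, and treating a score of exactly 15 as sufficient is an assumption.

```python
from collections import defaultdict

PASSPORT_THRESHOLD = 15  # threshold used for all Beta Round Core Rounds


def eligible_donors(score_snapshots, threshold=PASSPORT_THRESHOLD):
    """Donors whose highest observed score during the round meets the threshold.

    score_snapshots: iterable of hypothetical (donor_address, score) pairs
    observed at any point during the round.
    """
    best = defaultdict(float)
    for donor, score in score_snapshots:
        best[donor] = max(best[donor], score)
    # Assumption: a score equal to the threshold counts as sufficient.
    return {donor for donor, score in best.items() if score >= threshold}


def filter_by_passport(donations, score_snapshots, threshold=PASSPORT_THRESHOLD):
    """Keep donations whose donor reached the threshold at any point in the round."""
    ok = eligible_donors(score_snapshots, threshold)
    return [d for d in donations if d["donor"] in ok]


snapshots = [("0xa", 12.0), ("0xa", 16.5), ("0xb", 9.0)]
donations = [
    {"donor": "0xa", "grant": "g1", "usd": 5.0},  # counted: best score 16.5
    {"donor": "0xb", "grant": "g1", "usd": 5.0},  # dropped: best score 9.0
    {"donor": "0xc", "grant": "g2", "usd": 5.0},  # dropped: no Passport data
]
print([d["donor"] for d in filter_by_passport(donations, snapshots)])  # ['0xa']
```

Because only the maximum score is kept, a donor who gave while at 12 and later reached 16.5 has all of their donations counted.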

Donation minimum

Past cGrants rounds used a $1 donation minimum, while the Alpha Round cut off a variable bottom percentile of donations after manual analysis. Now that the Allo Protocol has a minimum donation feature built into Round Manager, we decided to go back to using a predetermined and public minimum value of $1.

An interesting consequence of using a USD-denominated minimum was that a 1 DAI donation was not always sufficient, depending on the DAI/USD conversion rate at the time of the transaction. There were thousands of 1 DAI donations, and while their values typically ranged from $0.998 to $1.002, over half fell below the $1 threshold and were initially excluded from matching. We decided that a user should reasonably expect a 1 DAI donation to count, and so that small, arbitrary price fluctuations would not decide whether a vote counted, we used $0.98 as the minimum for the final calculations.
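A minimal sketch of the minimum check, assuming each donation's USD value is its DAI amount multiplied by the DAI/USD rate recorded at transaction time. The rates below are illustrative, not round data. `Decimal` is used so comparisons right at the threshold are not affected by binary floating-point rounding.

```python
from decimal import Decimal

PUBLISHED_MINIMUM_USD = Decimal("1.00")  # the announced $1 minimum
FINAL_MINIMUM_USD = Decimal("0.98")      # the value used for final calculations


def usd_value(amount_dai: str, dai_usd_rate: str) -> Decimal:
    """USD value of a DAI donation at the rate observed when it was made."""
    return Decimal(amount_dai) * Decimal(dai_usd_rate)


def meets_minimum(amount_dai: str, dai_usd_rate: str,
                  minimum: Decimal = FINAL_MINIMUM_USD) -> bool:
    return usd_value(amount_dai, dai_usd_rate) >= minimum


# Illustrative DAI/USD rates spanning the range mentioned above.
rates = ["0.998", "0.999", "1.000", "1.001", "1.002"]
strict = [r for r in rates if meets_minimum("1", r, PUBLISHED_MINIMUM_USD)]
relaxed = [r for r in rates if meets_minimum("1", r)]
print(strict)   # ['1.000', '1.001', '1.002']
print(relaxed)  # all five rates
```

With the strict $1.00 cutoff, a 1 DAI donation made while DAI traded slightly under the dollar is dropped; the $0.98 cutoff absorbs that noise while still excluding genuinely smaller gifts.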

Sybil attacks and suspected bots

As with most QF rounds, we saw some sophisticated Sybil attack patterns that were not stopped by Passport (yet!). After on-chain data analysis and a manual sampling process, donations from addresses associated with these behaviors were excluded from the matching calculations. This included:

- Suspected bot activity based on specific transaction patterns and similarities

- Flagging known Sybil networks/addresses from prior rounds

- Enhanced analysis of Passport stamps and other data to flag evidence of abuse between different wallets

- Self-donations from grantee wallets
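Once addresses have been flagged, excluding them from matching is a straightforward filter. The sketch below assumes the flagging itself (bot patterns, known Sybil networks, Passport stamp analysis) has already produced a set of addresses, and that "self-donation" means a grantee wallet donating to its own grant. `flagged`, `grantee_wallets`, and the donation fields are hypothetical names.

```python
def exclude_flagged(donations, flagged, grantee_wallets):
    """Drop donations from flagged addresses and self-donations.

    donations: list of dicts with hypothetical "donor" and "grant" keys.
    flagged: set of donor addresses flagged by upstream Sybil/bot analysis.
    grantee_wallets: dict mapping grant id -> set of wallets tied to that grant.
    """
    kept = []
    for d in donations:
        if d["donor"] in flagged:
            continue  # suspected Sybil or bot: not counted for matching
        if d["donor"] in grantee_wallets.get(d["grant"], set()):
            continue  # grantee donating to their own grant
        kept.append(d)
    return kept


donations = [
    {"donor": "0xa", "grant": "g1"},
    {"donor": "0xbot", "grant": "g1"},
    {"donor": "0xowner", "grant": "g1"},
    {"donor": "0xowner", "grant": "g2"},
]
kept = exclude_flagged(donations, {"0xbot"}, {"g1": {"0xowner"}})
print([(d["donor"], d["grant"]) for d in kept])
# [('0xa', 'g1'), ('0xowner', 'g2')]
```

Under this assumption, a grantee's donation to a different grant is still counted; only the gift to their own grant is excluded.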

Matching caps

Matching caps, introduced many rounds ago on cGrants, set the maximum percentage of the total matching pool that any one grant can capture. Once a grant hits this cap, it earns no more, and any excess is redistributed proportionally to all other grants. Round caps were:

- 4% for Web3 OSS

- 10% for Climate

- 6% for Web3 Community & Education

- 10% for Eth Infra

- 10% for ZK Tech

These amounts were selected based on the number of grants expected to be in each round and the size of each matching pool, using prior rounds to help advise. We are always looking for community feedback on matching caps for future rounds!
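The cap-and-redistribute rule can be sketched as an iterative loop, since handing out the excess can push another grant over the cap. This sketch assumes the uncapped matches already sum to the pool and that, if every grant ends up capped, the remainder simply stays undistributed; neither detail is specified in this post.

```python
def apply_matching_cap(matches, pool, cap_fraction):
    """Cap each grant at cap_fraction * pool and redistribute the excess.

    matches: dict grant -> match amount, assumed to already sum to `pool`.
    Excess above the cap goes to still-uncapped grants in proportion to their
    current match. The loop repeats because redistribution can push another
    grant over the cap; each pass caps at least one new grant, so it ends.
    """
    cap = cap_fraction * pool
    result = dict(matches)
    capped = set()
    while True:
        excess = 0.0
        for g, amount in result.items():
            if g not in capped and amount > cap:
                excess += amount - cap
                result[g] = cap
                capped.add(g)
        uncapped_total = sum(a for g, a in result.items() if g not in capped)
        if excess == 0 or uncapped_total == 0:
            # Done, or every grant is capped (leftover stays in the pool).
            return result
        for g in result:
            if g not in capped:
                result[g] += excess * result[g] / uncapped_total


print(apply_matching_cap({"a": 60.0, "b": 30.0, "c": 10.0}, 100.0, 0.5))
# {'a': 50.0, 'b': 37.5, 'c': 12.5}
```

Here grant "a" is trimmed from 60 to the cap of 50, and its 10 of excess is split 3:1 between "b" and "c" in line with their existing matches.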

Please note: In an effort to create a transparent minimum viable version of the QF formula, simple quadratic funding (without pairwise matching) has been deployed here. In the future, we hope to offer pairwise matching and other customization options that will be set and published on-chain at the time of round creation.
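For readers unfamiliar with the formula, the textbook ("simple", non-pairwise) QF calculation can be sketched as follows. Each grant's ideal match is the square of the sum of the square roots of each donor's total contribution, minus the contributions themselves. The ideal matches are then scaled so the whole pool is distributed. This is an illustrative sketch under those assumptions, not the exact code Grants Stack runs, and it omits caps and the eligibility filters described above.

```python
import math
from collections import defaultdict


def quadratic_funding(donations, pool):
    """Textbook (non-pairwise) QF, scaled so the matches sum to `pool`.

    donations: list of dicts with hypothetical "grant", "donor", "usd" keys,
    assumed to have already passed the eligibility filters.
    """
    # Aggregate per (grant, donor) so splitting one gift into pieces doesn't help.
    per_donor = defaultdict(float)
    for d in donations:
        per_donor[(d["grant"], d["donor"])] += d["usd"]

    sqrt_sums = defaultdict(float)
    totals = defaultdict(float)
    for (grant, _donor), amount in per_donor.items():
        sqrt_sums[grant] += math.sqrt(amount)
        totals[grant] += amount

    # Ideal match: (sum of sqrt contributions)^2 minus what donors already gave.
    # max(0.0, ...) guards against tiny negative float error on single-donor grants.
    ideal = {g: max(0.0, sqrt_sums[g] ** 2 - totals[g]) for g in sqrt_sums}
    ideal_total = sum(ideal.values())
    if ideal_total == 0:
        return {g: 0.0 for g in ideal}
    # Scale proportionally so the whole pool is distributed.
    return {g: pool * v / ideal_total for g, v in ideal.items()}


example_donations = (
    [{"grant": "A", "donor": f"0x{i}", "usd": 1.0} for i in range(4)]
    + [{"grant": "B", "donor": "0xbig", "usd": 9.0},
       {"grant": "B", "donor": "0xsmall", "usd": 1.0}]
)
print(quadratic_funding(example_donations, 900.0))  # {'A': 600.0, 'B': 300.0}
```

Grant B raised $10 from two donors while grant A raised $4 from four, yet A receives twice B's match: QF rewards the breadth of support rather than the size of individual gifts.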

Future Analysis & Takeaways

DAO contributors will soon share detailed analyses, statistics, and takeaways from the Beta Round in addition to these results. You will find these posts in the governance forum in the coming weeks. For takeaways from the Alpha Round, see here for data, operations, and Passport retros.

Again, we thank everyone for your patience and for participating in the Beta Round. We’re excited for the next iteration of the Gitcoin Program and to begin supporting many other organizations as they run their own rounds.

For questions, comments, or concerns on any of the above, please comment below or join the Gitcoin Discord. Thanks for reading!