The purpose of this post-mortem is to document the experience with Gitcoin Passport during the Gitcoin Allo Alpha Rounds. The goal is to understand what went well, what went wrong, and what can be improved to prevent similar issues in the future.

Summary of the Experience

During the Gitcoin Allo Alpha Rounds, infrastructure instability in Gitcoin Passport prevented donors from verifying their unique identity for most of the program. As a result, a significant number of donors could not meaningfully participate, because without verification their donations would not qualify for matching funding.

When the infrastructure was accessible, users reported that their Stamps had been reset and that their Passports often needed to be “re-anchored.” Users who managed to verify their Stamps could then have their Passports scored to determine eligibility.

Background

Gitcoin Allo Alpha Rounds were pilot grant programs hosted by GitcoinDAO on the Gitcoin Allo Protocol, which is a new and more decentralized version of the grants product. The program was aimed at supporting innovative projects in the blockchain, open-source, and regen space, with a focus on increasing the impact and reach of such projects.

To keep the program fair and transparent, Gitcoin Grants uses a democratic matching funding mechanism called Quadratic Funding. Previous rounds have shown that grants programs can be manipulated by participants who create multiple accounts or wallets to influence the matching results. To address this, Gitcoin Passport was introduced as a decentralized identity verification tool that lets participants prove their unique humanity through various identity providers.
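To make the incentive behind multi-wallet manipulation concrete, here is a minimal sketch of the idealized Quadratic Funding formula from the QF literature, where a project's total funding is the square of the sum of the square roots of its contributions and the match is that total minus what donors paid. This is a simplification: it ignores matching-pool caps and any adjustments Gitcoin applies in practice, and `qf_match` is a hypothetical helper name.

```python
import math


def qf_match(contributions: list[float]) -> float:
    """Idealized quadratic funding match for a single project.

    Total funding is (sum of sqrt(contribution))^2; the match is that total
    minus what donors paid directly. Matching-pool caps and real-world
    adjustments are deliberately ignored in this sketch.
    """
    total_funding = sum(math.sqrt(c) for c in contributions) ** 2
    return total_funding - sum(contributions)


# One donor giving 100 from a single wallet.
single_wallet_match = qf_match([100.0])

# The same 100 split across ten wallets of 10 each.
split_wallet_match = qf_match([10.0] * 10)

print(f"single wallet: {single_wallet_match:.2f}")
print(f"ten wallets:   {split_wallet_match:.2f}")
```

Under this simplified model the single wallet attracts no match, while the same money split across ten wallets attracts a match of roughly 900, which is why tying each donation to a unique human matters.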

During the round, Gitcoin Passport worked by linking a participant’s digital identity to their Ethereum wallet address on the Ceramic Network and then verifying that identity through trusted identity providers. This was intended to ensure that each participant could have only one account and that their votes and donations were tied to their unique identity.
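The eligibility decision built on top of verified Stamps can be sketched as below, assuming the Passport score is a weighted sum of verified Stamps compared against a fixed threshold. The Stamp names, weights, threshold, and the rule that each provider counts only once are all hypothetical illustrations, not the Passport Scorer’s actual configuration.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold for illustration only; these are NOT
# the Passport Scorer's real configuration.
STAMP_WEIGHTS: dict[str, float] = {
    "Twitter": 1.0,
    "Discord": 1.0,
    "ENS": 2.0,
    "GitHub": 1.5,
}
MATCHING_THRESHOLD = 3.0


@dataclass(frozen=True)
class Stamp:
    provider: str   # identity provider that issued the credential
    verified: bool  # whether the credential currently verifies


def passport_score(stamps: list[Stamp]) -> float:
    """Sum the weights of verified Stamps, counting each provider once."""
    seen: set[str] = set()
    score = 0.0
    for stamp in stamps:
        if stamp.verified and stamp.provider not in seen:
            score += STAMP_WEIGHTS.get(stamp.provider, 0.0)
            seen.add(stamp.provider)
    return score


def eligible_for_matching(stamps: list[Stamp], threshold: float = MATCHING_THRESHOLD) -> bool:
    """A donor qualifies for matching only if the score reaches the threshold."""
    return passport_score(stamps) >= threshold


donor = [Stamp("Twitter", True), Stamp("ENS", True), Stamp("Discord", False)]
print(passport_score(donor), eligible_for_matching(donor))
```

In this sketch every Stamp that fails to verify, for example because of an infrastructure outage, directly lowers the score, which is how verification failures translate into lost matching eligibility.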

Timeline of Experience

- 2023/01/18: Users reported issues with the Ceramic Network, including requests blocked by a CORS policy and 504 Gateway Timeout errors. The team attempted to debug, restarted IPFS, and waited for feedback from Ceramic.

- 2023/01/19: Users experienced issues with their Passports and the verification process. The team asked users to be patient while it compiled an issues log. Kyle reported that several steps were in place to resolve the issue.

- 2023/01/20: A user reported difficulty verifying Twitter, Discord, and ENS Stamps. Kyle asked for an update, and Gerald mentioned that the team was close to a fix.

- 2023/01/21: The Ceramic Network became unhealthy, causing an outage and issues with the Gitcoin Passport app. Gerald worked on the problem and deployed a fix for the error pop-up.

- 2023/01/22: The team deployed a fix for the Ceramic Network Error and moved the Passport app to AWS Amplify to resolve the server-side errors we were seeing. Some web2 Stamps did not work because of the domain; a domain change was expected to fix them. Gerald identified a bug in the reset flow that caused an infinite loop.

- 2023/01/23: Chunks continued to fail to load, with a high rate of 4xx errors in Amplify. Gerald asked Kammerdiener for help with the issue, which affected only the Passport app.

- 2023/01/24: The team discussed two separate Ceramic Network Error issues, one on the Grant Explorer page and one on the Gitcoin Passport page, and tried to improve the situation by increasing the number of worker threads.

- 2023/01/25: Some users reported slow performance and trouble loading their Passports. Gitcoin pushed an upgrade to the Ceramic node. Another user reported that Stamps could not be verified because of a “too many open files” error.

- 2023/01/26: The team attempted to resolve issues with Passport Stamps and timeouts by restarting the IPFS and Ceramic nodes, and considered adding a caching layer.

- 2023/01/27: Passport connections continued to time out on the Gitcoin platform. Gerald restarted the nodes and considered scripting a restart every 3 hours. Kammerdiener suggested using AWS commands to trigger a redeploy. The product and engineering teams began exploring a hotfix: migrating Passport data from Ceramic to a centralized “cache” database.

- 2023/01/28: The Passport app was still not working, even though the servers were being restarted every 2 hours. Further node restarts did not resolve the issue.

- 2023/01/29: The Passport app and Datadog reported many errors. Restarting the Ceramic node again did not help. The team discussed adding a second Ceramic node, but doing so would cause a delay because the new node would first need to sync. Good progress was made on the cache, although migrating to a new data strategy remained a challenge. The Passport app was still not working for some team members.

- 2023/01/30: The team worked on fixing the Passport app and the Ceramic Network Error, trying various approaches, including changes to ENS Stamps. Joel from Ceramic suggested that turning off pub-sub could solve the issue. A new build was deployed and the team monitored the logs but could not find any issues. Some team members succeeded in removing and adding Stamps, while others still ran into trouble. The team tweeted that it was working on the issue, but by the end of the day the node was again not functioning properly. The team noticed a wave pattern in which requests trended downward and errored out, then spiked back up.

- 2023/01/31: Ceramic returned to a problematic state. The team decided to switch Passport to its own database instead of relying on Ceramic, informed the community of the change, and began migrating Passport away from Ceramic as its primary database.

The Triggering Event or Root Cause of the Incident

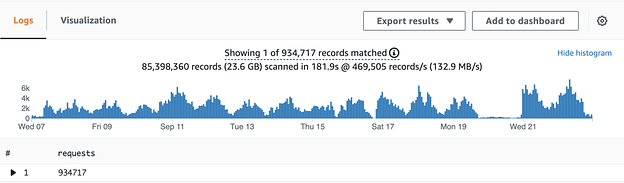

Gitcoin Passport requests on Ceramic in Gitcoin Grants Round 15, Source: Ceramic team

The incidents affecting the Passport app during the recent rounds were the result of increased load on the Ceramic node. Although fewer donors participated in these rounds than in Grants Round 15 (GR15), the number of write requests to the Ceramic node was higher, because a solid Passport score (derived by verifying various Stamps) was mandatory to receive matching, whereas in GR15 it only provided a boost.

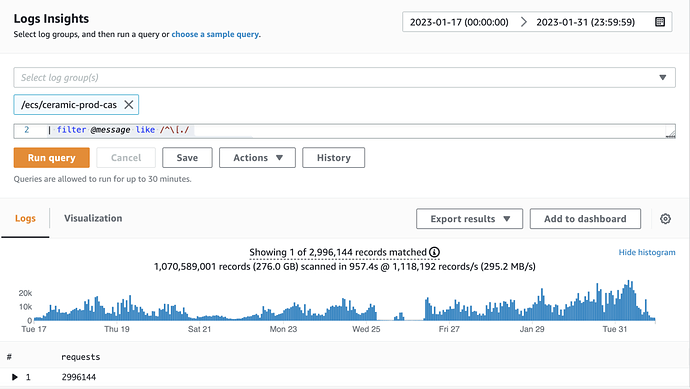

Gitcoin Passport requests on Ceramic in Gitcoin Allo Alpha Rounds, Source: Ceramic team

Despite efforts to resolve the issue by restarting the Ceramic node and its underlying IPFS nodes, the problem persisted. The Ceramic and Passport teams observed a wave pattern of failing requests trending downward and then spiking back up, but were unable to identify the root cause or a corresponding fix during the round.

Customer Impact

The incident had a significant negative impact on Gitcoin’s brand, with many users expressing frustration on platforms such as Twitter and the Gitcoin Discord. For users, the Passport app frequently failed to load or work properly, which was especially disruptive for those participating in the Gitcoin Allo Alpha Rounds. The team worked to resolve the issue and to communicate with the community about the changes being made.

Source: https://twitter.com/EnormousRage/status/1618922283829178369?s=20

Direct Outcomes

As a result of the ongoing issues with the Ceramic infrastructure, the team decided to migrate Passport to its own database instead of relying on Ceramic as the primary database. This change allowed users to verify their participation within a grace period after the round ended.

In other words, we have taken steps to migrate the Passport app and Scorer so that they read from and write to our centrally hosted database instead of Ceramic. We plan to work on migrating Passports to Ceramic in the background.
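The read/write split described above can be sketched as a store in which the central database is authoritative and Ceramic writes are queued and retried in the background. This is a minimal, hypothetical sketch rather than the Passport codebase: an in-memory dict stands in for the centrally hosted database, and `ceramic_write` is a placeholder for whatever client call actually publishes a Passport to Ceramic.

```python
from collections import deque
from typing import Callable, Optional


class PassportStore:
    """Hypothetical sketch: the central database is the source of truth,
    and Ceramic is updated best-effort in the background."""

    def __init__(self, ceramic_write: Callable[[str, dict], None]) -> None:
        self._db: dict[str, dict] = {}       # stand-in for the centrally hosted database
        self._pending: deque[str] = deque()  # addresses not yet synced to Ceramic
        self._ceramic_write = ceramic_write  # hypothetical Ceramic client call

    def save(self, address: str, passport: dict) -> None:
        # A write succeeds as soon as the central database is updated.
        self._db[address] = passport
        if address not in self._pending:
            self._pending.append(address)

    def load(self, address: str) -> Optional[dict]:
        # Reads never touch Ceramic, so a Ceramic outage cannot block them.
        return self._db.get(address)

    def pending_count(self) -> int:
        return len(self._pending)

    def sync_to_ceramic(self, max_items: int = 100) -> int:
        """Push up to max_items pending Passports; failed ones are re-queued."""
        synced = 0
        for _ in range(min(max_items, len(self._pending))):
            address = self._pending.popleft()
            try:
                # Always send the latest copy from the central database.
                self._ceramic_write(address, self._db[address])
            except Exception:
                self._pending.append(address)  # retry on a later pass
            else:
                synced += 1
        return synced


# Usage with a fake writer whose first call fails, as during the outage.
attempts: list[str] = []


def flaky_ceramic_write(address: str, passport: dict) -> None:
    attempts.append(address)
    if len(attempts) == 1:
        raise ConnectionError("simulated Ceramic timeout")


store = PassportStore(flaky_ceramic_write)
store.save("0xabc", {"stamps": ["ENS"]})
print(store.load("0xabc"))             # served from the central database
first_pass = store.sync_to_ceramic()   # Ceramic write fails, entry stays queued
second_pass = store.sync_to_ceramic()  # retry succeeds
```

The design choice is that Ceramic availability only affects how quickly data is replicated, not whether users can verify Stamps or be scored.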

Preventive Measures in Future Rounds

To prevent similar issues in future rounds, we identified the need to conduct rigorous load testing before each round to reveal potential issues with Passport. We are taking the following preventive measures:

- Using a new PostgreSQL instance as the primary cache database for Gitcoin Passport.

- Exploring on-chain solutions for Passport to benefit from the reliable uptime and data availability on blockchains.

- The Ceramic team acknowledges the experienced instability and supports a primary cache database mitigation proposed as an intermediate solution. Meanwhile, Ceramic is committed to conducting rigorous load testing and making improvements to ensure the stability of the Ceramic system for future rounds. Ceramic is dedicated to the Gitcoin Passport mission and is determined to make Gitcoin Passport reliably composable across web3.
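The pre-round load testing called for above could start from a small harness like the one below, which fires many concurrent requests and reports the error rate and 95th-percentile latency. This is a sketch under stated assumptions: `fake_stamp_write` is a hypothetical stand-in that fails every tenth request, and a real test would instead call Passport or Ceramic endpoints in a staging environment through an HTTP client.

```python
import asyncio
import statistics
import time
from dataclasses import dataclass, field
from typing import Awaitable, Callable


@dataclass
class LoadTestResult:
    total: int
    failures: int
    latencies: list[float] = field(default_factory=list)  # successful requests only

    @property
    def error_rate(self) -> float:
        return self.failures / self.total if self.total else 0.0

    @property
    def p95_latency(self) -> float:
        if len(self.latencies) < 2:
            return self.latencies[0] if self.latencies else 0.0
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        return statistics.quantiles(self.latencies, n=20)[-1]


async def run_load_test(
    send_request: Callable[[int], Awaitable[None]],
    total_requests: int,
    concurrency: int,
) -> LoadTestResult:
    """Fire total_requests calls with at most `concurrency` in flight at once."""
    semaphore = asyncio.Semaphore(concurrency)
    result = LoadTestResult(total=total_requests, failures=0)

    async def one(request_id: int) -> None:
        async with semaphore:
            start = time.perf_counter()
            try:
                await send_request(request_id)
            except Exception:
                result.failures += 1
            else:
                result.latencies.append(time.perf_counter() - start)

    await asyncio.gather(*(one(i) for i in range(total_requests)))
    return result


# Hypothetical stand-in for a Stamp write; every 10th request times out.
async def fake_stamp_write(request_id: int) -> None:
    await asyncio.sleep(0.001)
    if request_id % 10 == 0:
        raise TimeoutError("simulated gateway timeout")


result = asyncio.run(run_load_test(fake_stamp_write, total_requests=100, concurrency=20))
print(f"error rate {result.error_rate:.0%}, p95 latency {result.p95_latency * 1000:.1f} ms")
```

Running such a harness at several concurrency levels, with a write mix resembling a round where a Passport score is mandatory, is one way to surface capacity limits before donors hit them.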

Risk and Mitigation Strategies

- Improve communication and attention to issues:

  - Increase transparency by providing more frequent updates to the community about the status of Gitcoin Passport and any issues that arise.

  - Enhance the monitoring and alert systems to quickly identify and address any potential issues.

  - Implement a protocol for reviewing and addressing community feedback to improve Gitcoin Passport.

- Ensure reliability and scalability of the centrally hosted Gitcoin Passport database:

  - Conduct regular audits and testing of the database to identify potential issues and ensure that it is functioning properly.

  - Implement redundancy and backup measures (e.g., via Ceramic, on-chain solutions, and more) to minimize the risk of data loss.

  - Evaluate the need for additional infrastructure and capacity to support future growth.

- Enhance collaboration with the Ceramic team:

  - Establish clear lines of communication and collaboration with the Ceramic team to prevent similar issues from occurring in the future.

  - Regularly review and assess Gitcoin Passport’s architecture and infrastructure to ensure compatibility and reliability with the Ceramic network.

  - Foster an ongoing dialogue with the Ceramic team to identify potential risks and vulnerabilities and develop appropriate mitigation strategies.
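One concrete form the enhanced monitoring and alerting could take is an alert on the error rate over a sliding time window, which would have flagged the failing-request waves seen during the round. The sketch below is hypothetical: the window length, threshold, and minimum request count are illustrative values, and in production this logic would more likely live in the monitoring platform the team already uses (such as Datadog) than in application code.

```python
from collections import deque


class ErrorRateMonitor:
    """Hypothetical sliding-window error-rate alert."""

    def __init__(self, window_seconds: float, threshold: float, min_requests: int = 20) -> None:
        self.window_seconds = window_seconds  # how far back to look
        self.threshold = threshold            # alert when error rate exceeds this
        self.min_requests = min_requests      # avoid alerting on tiny samples
        self._events: deque[tuple[float, bool]] = deque()  # (timestamp, succeeded)
        self._failures = 0

    def record(self, succeeded: bool, now: float) -> None:
        self._events.append((now, succeeded))
        if not succeeded:
            self._failures += 1
        self._evict(now)

    def _evict(self, now: float) -> None:
        # Drop events older than the window and keep the failure count in sync.
        cutoff = now - self.window_seconds
        while self._events and self._events[0][0] < cutoff:
            _, succeeded = self._events.popleft()
            if not succeeded:
                self._failures -= 1

    def error_rate(self, now: float) -> float:
        self._evict(now)
        return self._failures / len(self._events) if self._events else 0.0

    def should_alert(self, now: float) -> bool:
        self._evict(now)
        return len(self._events) >= self.min_requests and self.error_rate(now) > self.threshold


monitor = ErrorRateMonitor(window_seconds=60.0, threshold=0.2, min_requests=10)
for t in range(10):
    monitor.record(succeeded=True, now=float(t))
quiet = monitor.should_alert(now=9.0)    # all requests succeeded so far
for t in range(10, 15):
    monitor.record(succeeded=False, now=float(t))
firing = monitor.should_alert(now=14.0)  # 5 of 15 requests failed
print(quiet, firing)
```

The minimum request count keeps a handful of early failures from paging anyone, while the window lets the alert clear on its own once requests recover.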