I’m not saying there hasn’t been proper communication in the process. I was only trying to understand whether a slimmer Option 4 is possible, but @ZER8 said it is not, so that answered my question.

From an FDD standpoint, I’d say there isn’t a smaller amount we would be comfortable with as anything further would not be a unit serving a mandate, but Gitcoin could survive a round or two by hiring for the execution of a complicated task. (Most likely FDD contributors, but we would need to know exactly which tasks were requested.)

Option 5 = Less than option 4 - Hired guns

Gitcoin would survive (probably), but there would not be a workstream serving the mandate. The services could be hired from FDD contributors. Vote for Option 5 if this is your choice.

Advantages

- Very low cost

- Ability to replace autonomous workstream with service provider (No scope creep)

Disadvantages

- Lose insights and knowledge gained in the last year

- Fall behind the red team

- Potential for large losses for the Gitcoin community - Probably not this round, but in the following ones, because the work isn’t being done to keep up with the evolving attacks

- Laying off people who have done good work for Gitcoin

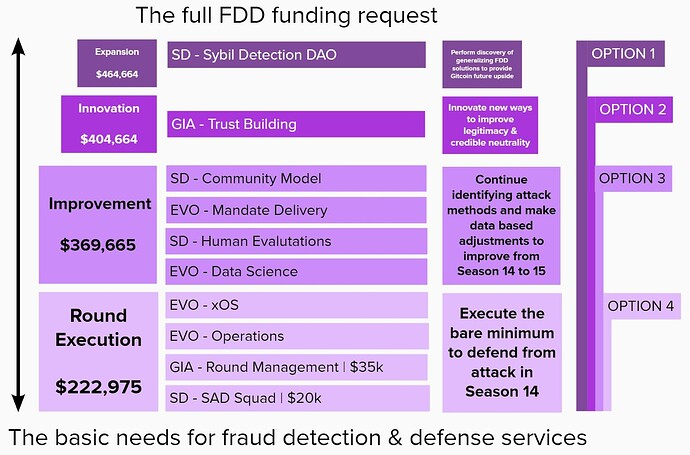

Option 4 - FDD Executes the round at bare minimum

Advantage

- Keep FDD an autonomous unit in GitcoinDAO still working out how to best fulfill its mandate and serve Gitcoin’s mission

- Maintain knowledge and insights built over the last year

- Lean grants reviews

Disadvantage

- Lose ground gained against red team

- Insufficient funding to continue developing new models to serve grants 2.0 (Some will be done, but not sure how much can be done with the limited time and resources)

- Laying off people who have done good work for Gitcoin

- Signal opportunity to attackers (Like announcing there won’t be a security guard on Saturday night at a retail store)

Option 3 - Continue progress on classifying attack classes and mitigation techniques

Advantage

- Build on previous learning - Here is an example from a new technique found in the last few weeks

- Develop new techniques in line with dPoPP & Project history grants 2.0 future

- Gain data on grants reviews to be able to scale Gitcoin rounds on grants 2.0

- Understands that the human evaluations are the ethical underpinning that removes bias from our algorithms. We want the algo to “think” like the community, not like the engineers that built it. Our humans in the loop process is cutting edge ethical AI and the decision to defund is to put profit ahead of purpose.

Disadvantage

- Spend more (Less than any other workstream - Is Sybil Defense and Grant Eligibility less important than every other outcome the DAO works to achieve?)

Option 2 - Continue building on work to decentralize grant eligibility reviews for all future communities using grants 2.0

Advantage

- In line with intended outcome of helping communities to better allocate their resources

- Shows an ethical difference between content moderation approaches in web 3 and web 2. Think of sybil defense as user moderation and grant eligibility as content moderation. Did we learn anything about how not to do moderation from Twitter and Facebook?

- Potential reputation builder and proof-of-project history complement

- Upside potential if generalized

Disadvantage

- Costs $35k

- Could fail

Option 1 - Allow FDD to set a precedent for how to spin out efforts when the positive externalities to the ecosystem align with GitcoinDAO’s mission, but exceed the mandate of the workstream.

Advantage

- Potentially create large upside for GitcoinDAO in ownership of the public infrastructure.

- Show how to build and launch public goods supporting protocols without VC funding

- Huge Aqueduct potential

- Why not build mutual grants with something we built and know works! Validation = See hop protocol sybil contest

- Creates a model for how to split off projects with upside when an autonomous workstream’s scope creeps in a direction that is in line with the overall mission of GitcoinDAO but outside of its critical mandate.

Disadvantage

- Costs $60k

- Could fail

Over the last couple years the anti-fraud personnel and efforts of Gitcoin grew enough that it became a dedicated unique workstream of the Gitcoin Dao. In other words…the FDD was born.

The early years were full of excitement and potential, but this was not a permanent state. Like a classic confused teenager, the FDD had to experiment with identity, finding and creating it in the rapidly shifting environment of web3. During all this the FDD has protected its family, even as it brainstormed roadmaps to self-sufficiency and revenue-generation. The FDD was always mission-first. But we must now reconsider what that mission is.

Some believe the FDD scope has drifted away from the core mission. Before approving a budget they want assurances the FDD will continue to honour Gitcoin Dao priorities. I hear these valid concerns. I think most folks recognize the need for balance between mission & innovation.

The FDD has always been here to serve Gitcoin and this should continue. The FDD should focus on what the GitcoinDAO finds most important (every workstream should). The FDD is finding its dao IKIGAI just like an individual. Over the past 3-4 seasons I watched communication & coordination between workstreams improve dramatically. This helped everyone understand the big picture. A picture that now suggests low priorities should be paused/cancelled due to macro state.

The FDD is keen to tightly align with the Gitcoin Dao. If we came out of alignment it was completely unintentional. I am lifted by the idea the FDD can serve the dao even better! With the community’s input the FDD can identify and trim the fat. It is great that people care enough to speak up, and help the FDD understand and align with the Dao. We in the FDD are eager to boost Gitcoin Dao to the next level, however that looks.

There is no group better positioned to achieve greater anti-fraud results at Gitcoin.

There is no group with the solid history of the FDD and the technical partnerships needed to effectively protect Gitcoin.

There is no other group ready/interested to defend Gitcoin in the future. Gitcoin can be among the leading web3 anti-sybil/anti-fraud experts. This will happen via the FDD.

Some of the un-alignment accusations came as a surprise and the FDD should have a season to respond. If you think the scope of FDD drifted please give us the chance to demonstrate how aligned, lean and laser-focused we can be. Help us understand the dao-wide priorities so we can help the dao. Try to guide this crusty teenager before abandoning them. The results will be incredible.

Conclusion:

My preference is for option 2. I think the extreme reductions of option 4 are unprecedented and would sacrifice some of the significant innovations the FDD has been developing, namely a new decentralized review model (called Permissionless Reviewing) and the in-training Machine Learning system which is becoming more and more capable. Option 3 features even larger reductions than were first requested by Kyle.

Lastly, I want to recognize our unity. It is great to see so many dedicated and loyal folks committed to the long term success of Gitcoin. We are all on the same team here. Go team!

Thanks @DisruptionJoe and team for mapping this out. Dare I say, for the first time in a while I can actually understand the different funding options and the outcomes FDD would deliver with each. Huge kudos to the team for mapping out options in this way. I would really love to see more Workstreams approach this in S15.

I am supportive of Option 4 and on the fence on Option 3. The work to review grants, operate the rounds and offer an analysis after the round is valuable. I am still worried that some of the direction of FDD (model build out, approach to human evaluations and even the community model) may not be aligned long term with where I think FDD could be headed (this is just my opinion). So I will likely just stick to voting for option 4 for this season.

Thank you for the continued collaboration on moving this forward and taking all the steward comments into account and really taking the steward feedback on board. It’s been one of my highlights of this season to really see us all collaborating to find a middle ground for how we move forward.

I am wondering if there is a combination between these two? I would opt for Option 4, but I am forever wanting innovation over stagnation, so what would be a balance here where we continue iterating models (maybe it’s ONE per season?) for improvement but without a 140k price tag? It is not an easy or pleasant task scrutinizing to this level, but could we perhaps get a clearer definition of what “models for making round to round improvements” means or entails?

Thanks for the meaningful and direct feedback.

Let’s say you are a sybil attacker. You make a bunch of accounts Simona1, Simona2, Simona3… This is a clear signal, but we wouldn’t see the usernames being closely related without:

- Noticing the behavior

- Turning the insight into a data problem - A model

- Creating a process for continually evaluating and updating the model

Here is the example of how we recently created another model for fighting off your attack: Fighting Simple Sybils: Levenshtein Distance - HackMD
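To make the idea concrete, here is a minimal sketch of flagging closely related usernames with Levenshtein (edit) distance. It is not the code from the linked write-up; the function names, the sample usernames and the `max_distance` threshold are all assumptions chosen for illustration.

```python
from itertools import combinations


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum number of single-character inserts,
    deletes and substitutions needed to turn a into b."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or match)
            ))
        previous = current
    return previous[-1]


def near_duplicate_pairs(usernames, max_distance=1):
    """Return username pairs within max_distance edits (hypothetical threshold)."""
    return [
        (u, v)
        for u, v in combinations(usernames, 2)
        if levenshtein(u.lower(), v.lower()) <= max_distance
    ]


# Illustrative data only, not real accounts.
names = ["Simona1", "Simona2", "Simona3", "alice_eth"]
pairs = near_duplicate_pairs(names)
for u, v in pairs:
    print(u, v)
```

The pairwise comparison is quadratic in the number of accounts, so a real pipeline would likely need to bucket or pre-filter usernames first. The threshold also matters: a looser `max_distance` catches more variants but flags more legitimate users.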

Now we know how to detect it, but we need to either manually squelch those accounts or do it algorithmically. Algorithmic scales, manual does not.

To make this “feature” a part of the algorithmic detection we must go a step further. We can turn the detection of the behavior into a feature for the ml model. This is the overall algorithm that combines many features to determine if an account is sybil.

But what happens when the legit user “Simona69” gets caught in the heuristic? This is why we have humans in the loop. https://medium.com/vsinghbisen/what-is-human-in-the-loop-machine-learning-why-how-used-in-ai-60c7b44eb2c0

The humans will notice that Simona69 is not like the 68 before it. This info will then train the algo that the high quality signal DOES have exceptions.
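A rough sketch of that feedback loop, under stated assumptions: the heuristic produces a set of flagged usernames, reviewers supply verdicts for some of them, and each verdict both overrides the flag and becomes a labeled training example. The function name, the data shapes and the rule that unreviewed flags stand are all hypothetical, not the FDD's actual process.

```python
def apply_human_review(flagged, human_labels):
    """Combine heuristic flags with reviewer verdicts.

    flagged:      set of usernames the heuristic marked as likely sybil
    human_labels: dict of username -> True (sybil) or False (legitimate)

    Returns (final_sybils, training_examples).
    """
    final_sybils = set()
    training_examples = []
    for user in sorted(flagged):
        verdict = human_labels.get(user)
        if verdict is None:
            # Assumption: with no human verdict, the heuristic's flag stands.
            final_sybils.add(user)
            continue
        if verdict:
            final_sybils.add(user)
        # Every reviewed account becomes a labeled example for retraining.
        training_examples.append((user, verdict))
    return final_sybils, training_examples


# Illustrative data only.
flagged = {"Simona1", "Simona2", "Simona69"}
human_labels = {"Simona1": True, "Simona69": False}
final_sybils, training_examples = apply_human_review(flagged, human_labels)
print(sorted(final_sybils))
print(training_examples)
```

Here Simona69 is released despite matching the heuristic, and the `("Simona69", False)` example is what would teach a model that the username pattern has exceptions.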

The more humans that we have evaluate, the more opportunities for us to spot the algorithm being unfair to classes of users. The community model is finding new features and models to incorporate into what you might call a “meta-model” or ensemble which is best capable of accurately identifying sybil, and more importantly - NOT SYBIL accounts.

We have discussed moving the data science initiative to DAOops and maybe this is the time to do that. It helps FDD, but could be more beneficial to the DAO as a whole. The interesting part of connecting the group to FDD is that spotting sybil behavior is a lot like moving your Scrabble pieces around to see if you have a word. Sometimes we know exactly what data to request to build a feature, but spotting new behaviors is a mix of active analysis and serendipity.

We could lower the overall ask to $115k for the round to round improvements and ethical guardrails to be in place by transferring the data science budget to come from DAOops.

This is really interesting actually and a trajectory I am seeing in other DAOs also - I wonder if there is enough understanding of the possibilities of DS for the DAO that would warrant a “DS as a service” function to be accessed by workstreams

Thanks for this updated proposal Joe & team. I’ll just copy what simona wrote here because I wholeheartedly agree:

A quick question on this:

… are you saying here that you would be counting on DAOops to take over these roles? Or am I misinterpreting this? Because this is not really in our current scope.

This is also not something we could take on at this time I fear, data analysis that is crucial to the DAO at the moment is FDD related, so I would propose for it to remain with FDD.

On my vote, I am unsure how I will vote at this moment and looking forward to more comments from others. I’d definitely vote for innovation, same comments as Simona.

Thanks for all your work on this, I know this is a tough process but it’s making our DAO more transparent + resilient and us all better at giving and receiving feedback. Appreciate all you do.

Nope. Saying that the role exists because DAOops requested us to have someone in that role. It shouldn’t be by storyteller though!

Option 3 is focused on improvements, but not necessarily innovation. Options 1 & 2 have innovation. The difference being that they are creating new value or systems rather than continually improving or tweaking the ones we have.

Based on your comment, I think you are most aligned with Option 3.

I think this is very insightful for anyone considering options 1 & 2 or looking at FDD as future drivers of dPoPP adoption. [S14 Proposal] FDD Season 14 Budget Request - #50 by danlessa

Hello,

I want to echo other people’s comments and thank you for taking the time to incorporate feedback from the stewards in the budget proposals.

Before talking at all about the current options let me re-iterate the feedback I tried to give in the google doc you had DMed me which seems to have evolved to become this proposal.

What I would like to see come out of the FDD WG is an algorithmic software solution for evaluation of grants and sybil detection, with minimal human input. I do understand that human input will always be needed at some point, but it should be kept to a minimum as it does not scale.

For simple manual reviews of grants, which is what I understand Option 4 to be, I think even Option 4 is very expensive. Manual review and classification of grants is very simple work that could be done even by someone from Upwork for, say, $10/hr.

Instead, and please please correct me as I may be understanding the budget wrong, what I see is:

A request of $222,975 for 4.8 months (3 months + 60% reserves) for 5 full time contributors.

That translates to a 222975 / 5 / 4.8 → $9290.625 per month for each employee to do “the bare minimum” which is what I understand is manual reviews.

This is an insane monthly salary for just manually reviewing grants.
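The per-contributor figure can be checked with a few lines of arithmetic. This sketch only reproduces the calculation as stated in this post (total request, 5 contributors, 3 months plus 60% reserves); the variable names are illustrative, and whether the headcount and scope assumptions match the actual budget is a separate question.

```python
from fractions import Fraction

# Inputs as stated in the post; exact fractions avoid float rounding noise.
total_request_usd = Fraction(222_975)
contributors = 5
base_months = Fraction(3)
reserve_fraction = Fraction(60, 100)  # "60% reserves"

# 3 months plus 60% reserves gives the 4.8 months used in the post.
months = base_months * (1 + reserve_fraction)

per_contributor_per_month = total_request_usd / contributors / months

print(float(months))
print(float(per_contributor_per_month))
```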

That said what I want to see from FDD is to focus and iterate on delivering a software solution combined with minimal human input. Can you guys pull that off? So far I have not seen the most encouraging results.

I see lots of hard to understand jargon and no software yet.

I am at the moment not sure how to vote. I would also want to see what other stewards say.

This is a very funny situation. Option 4 doesn’t mean only grant reviewing. By that logic, everything that the other workstreams do can be outsourced via Upwork? Am I wrong to assume this? I thought this was a DAO, or is it a CAO which we call a DAO, but it’s just a corporation that’s online 24/7? Is that the vision? I don’t think so…

The FDD has multiple algorithmic solutions, and the Reward system (GIA Rewards OKR Report), which includes grant reviewers, is only one of the solutions.

The total cost of the grant reviews was exactly 14k last season, and we protected millions of dollars of funds (more from an eligibility POV). Do you think it’s expensive to do manual grant reviews?

I don’t think you actually know the issues/challenges we face as the FDD, and even more so in the GIA, which is in charge of grant reviewing. 14K was the cost of 12,000-15,000 reviews! The FDD does not mean grant reviewing…

We could hire people from Upwork, but that would cost us even more… We would need to train each of them, which takes a season or two. We actually pay our reviewers $10-20 per hour atm… On another note, I really thought we had the ethos of decentralization here, but reading your comments I kinda see through that “mirage”. Even the way in which we recruited the reviewers was fair and open to everyone. We recruited them chronologically, in the order they reached out to us, and we wanted to keep things neutral and fair even within our squads.

Reading your comment, it feels like all my work within the FDD for the past three to six months was null, and I can’t be happy about that… Why are stewards not even looking at what we are building and just “hating” on us? Is that fair for the contributors here? Is that fair towards me? Who worked 60+ hours a week and 80+ during the round to make sure the grant creators, our donors and our matching funds are protected? (For real, you can look in our Discord during the round and see what’s up.) Associating the FDD with grant reviews nullifies all the hard work done by my peers.

If you really care about Gitcoin DAO, the grants program and our community please try to understand what major issues we face …we have entire organizations trying to game the system, we have hundreds of people that are creating fake grants…all while running the round, ensuring sybil protection and trying to solve “unsolved” research problems…

Those people are not reviewing grants. I am the driver of Round Management, and only my squad handles grant reviewing, which, as I stated above, costs 10-20k MAX per round. This round we actually want to reduce that number by 50%, which is not fair to our contributors, but we need to reach a balance that satisfies the DAO.

That’s your truth, but is it the truth?

We can have a call anytime (takes 10 minutes) and I can explain and walk you through the whole process of grant reviewing and why we opted for this system, which works amazingly btw

I’m sure that @DisruptionJoe @omnianalytics @Sirlupinwatson @David_Dyor and @vogue20033 can explain more about our collective efforts.

PS. I know and completely agree that the DAO has some big issues. Parasitic behaviors are a threat to any organisation… The FDD is not the biggest spender, and we work on one of the most complicated and complex sets of problems. I don’t believe that cutting our budget will do the DAO a favor. If people vote for Option 3, which is less than 400k, they will see that we could and probably will continue to save funds (from our donors and matching funds) worth more than that number. Have a good day ser

Hi there! In short,

Sorry about that, we will try to simplify the literature that we are using so you can understand what we are working on and what we are doing/trying to achieve.

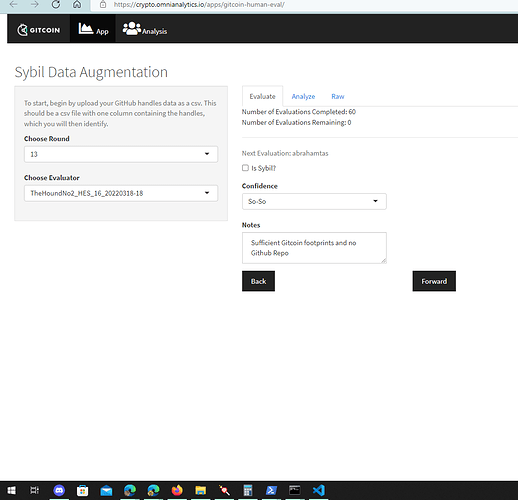

Fundamentally, we have two working algorithms for Sybil Detection classification and one application that can automatically run prediction/analysis from the human-in-the-loop inputs (Human Evaluations)

“ASOP” → Anti-Sybil Operationalized Process or “SAD” Fraud-Detection-and-Defense (github.com)

Explained by @danlessa in depth here

Software (You will need a username and a passcode to see it, if you are interested let me know)

@omnianalytics

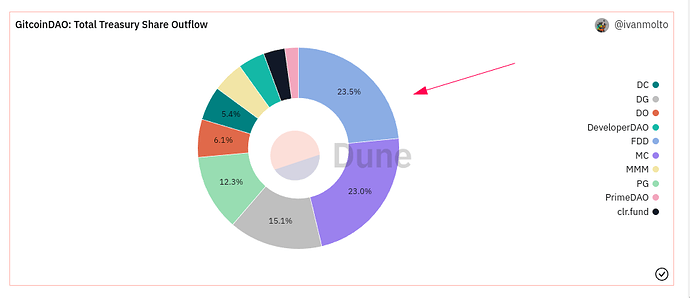

The FDD is the biggest spender. (source: https://dune.com/ivanmolto/Gitcoin-DAO)

Those people are not reviewing grants, I am the driver of Round Management, only my squad handles grant reviewing

and as I stated above that costs 10-20k MAX per round. This round we actually want to reduce that number with 50%, which is not fair for our contributors, but we need to reach a balance that satisfies the DAO.

Please describe to me in detail, then, what “bare minimum” is and where each part of the requested $222,975 goes, so I can make an informed decision.

You seem to have some kind of misunderstanding that it’s my or other stewards’ fault for not understanding your problems or for not diving deep enough into what you are doing.

I am getting paid nothing to do this. You are asking money from the DAO and I am asked to say yes or no. If you want a yes you will have to keep it simple, keep it very short and explain where the money goes in enough detail so I can make an informed decision if the ask is too much or not.

If you give me very long essays to read, I will simply default to saying no.

Thank you Armin.

The link to Github is broken. I assume because the repository is private?

https://github.com/Fraud-Detection-and-Defense/CASM <— this one.

Software (You will need a username and a passcode to see it, if you are interested let me know)

Yes that would be cool to try it out. Good idea. But first I would like to see code. I am a developer so that’s what I can judge best.

Yes, these are private repos. You can request access from @omnianalytics (OmniAnalytics#5482) on Discord: just send him your GitHub username and he can add you to the group.

For the “ASOP” “SAD” @DisruptionJoe can add you in the group as well.

I will do that. But why private? Since we are all about opensource software here, I would expect what the DAO produces to be opensource too.

The repos contain sensitive information, like the specific features being used for sybil detection and how they’re retrieved. Making those open source would make it obvious to attackers what to look into in order to avoid detection. I don’t see a hard blocker for opening it up, but this should be part of an involved discussion (which FDD is already doing a lot) and the precautionary principle should apply.

Also, the SAD codebase is as of now a monolithic piece of software, which makes it non-trivial to separate the sensitive parts into separate repos. It’s definitely doable as part of the FDD’s evolution, and opting for increased community involvement in Sybil Detection will make it highly desirable.

I was talking about this season

I understand. I’m actually upset because I’m sure we both have Gitcoin DAO’s best interest at heart. Our solutions are not that easy to comprehend because the threats are the same.

Maybe next season there could be a Steward Budget call in which all the workstreams present their needs and accomplishments with their previous budget

The simplest way of seeing what we are doing is the diagram: