
This could be accomplished by exposing a grants subgraph that shows contributions from each wallet address and the desoc data associated with that wallet.

For example:

    query {
      donors(
        where: {
          grantRound_in: ["GR14"]
        }
      ) {
        id
        contributions {
          grant
          amount
        }
        desoc {
          credentials {
            name
            verificationDate
          }
          nfts {
            id
            mintDate
          }
          poaps {
            id
            mintDate
          }
          lens {
            numFollowers
            numFollowing
          }
          poh {
            profileCreatedDate
          }
        }
      }
    }

Critically, the API does not show an actual score, just the underlying data needed to build a scoring model.

Developers in the community could create their own scoring algorithms from this data, all producing a common output for a list of wallets, e.g., `{"wallet": "0x..", "sybilScore": 0.87, "squelch": true}`.
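To make the idea of a common output concrete, here is a rough sketch of what one community scoring function might look like. It assumes donor records shaped like the query result above; the weights, the `threshold` default, and the function names are all made up for illustration and are not a proposed model.

```python
# Hypothetical weights for each desoc signal; purely illustrative values.
SIGNAL_WEIGHTS = {
    "credentials": 0.3,
    "nfts": 0.1,
    "poaps": 0.2,
    "lens": 0.2,
    "poh": 0.2,
}


def trust_signals(desoc):
    """Reduce a donor's desoc record to presence/absence flags per signal."""
    lens = desoc.get("lens") or {}
    poh = desoc.get("poh") or {}
    return {
        "credentials": bool(desoc.get("credentials")),
        "nfts": bool(desoc.get("nfts")),
        "poaps": bool(desoc.get("poaps")),
        "lens": (lens.get("numFollowers") or 0) > 0,
        "poh": bool(poh.get("profileCreatedDate")),
    }


def score_donor(donor, threshold=0.8):
    """Return the common output record for one donor.

    The score is 1 minus the summed weight of the signals that are present,
    so a wallet with no desoc data at all scores 1.0. The threshold is an
    assumed default, not a calibrated value.
    """
    signals = trust_signals(donor.get("desoc") or {})
    trust = sum(SIGNAL_WEIGHTS[name] for name, present in signals.items() if present)
    sybil_score = round(min(1.0, max(0.0, 1.0 - trust)), 2)
    return {
        "wallet": donor["id"],
        "sybilScore": sybil_score,
        "squelch": sybil_score >= threshold,
    }


def score_donors(donors, threshold=0.8):
    """Score a list of donor records, one output record per wallet."""
    return [score_donor(donor, threshold) for donor in donors]
```

Any real algorithm would presumably use richer features (dates, contribution patterns, follower graphs), but as long as it emits the same three fields per wallet, results from different developers can be compared directly.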

Developers could also complement the Gitcoin graph with other desoc data from on-chain sources, for example verses.xyz signatures on Arweave.

FDD could back-check a proportion of the cases where community algorithms do not reach consensus. This may require some calibration of the sybilScore threshold that triggers a squelch, e.g., a more lenient algorithm might squelch only 1% of accounts, whereas a tougher one might squelch 10%.
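One way this could work, sketched very roughly: calibrate each algorithm's threshold so they all squelch about the same share of wallets, find the wallets where the algorithms disagree, then pull a random sample of those for human review. The common target rate, the function names, and the input shape (`{algorithm: {wallet: sybilScore}}`) are assumptions for the sake of the example.

```python
import random


def calibrate_threshold(scores, target_squelch_rate):
    """Return the score cut-off that squelches roughly the target share.

    Wallets scoring at or above the cut-off are squelched. Ties at the
    cut-off can push the actual share slightly above the target.
    """
    if not scores:
        raise ValueError("need at least one score to calibrate")
    ranked = sorted(scores, reverse=True)
    n_squelch = max(1, round(len(ranked) * target_squelch_rate))
    return ranked[n_squelch - 1]


def squelch_votes(scores_by_algo, target_squelch_rate):
    """Map each wallet to {algorithm: squelch decision} after calibration."""
    votes = {}
    for algo, scores in scores_by_algo.items():
        cutoff = calibrate_threshold(list(scores.values()), target_squelch_rate)
        for wallet, score in scores.items():
            votes.setdefault(wallet, {})[algo] = score >= cutoff
    return votes


def disputed_wallets(votes):
    """Wallets where the algorithms did not all reach the same decision."""
    return sorted(w for w, decisions in votes.items() if len(set(decisions.values())) > 1)


def sample_for_backcheck(disputed, proportion, seed=0):
    """Pick a reproducible random subset of disputed wallets for review."""
    if not disputed:
        return []
    k = min(len(disputed), max(1, round(len(disputed) * proportion)))
    return random.Random(seed).sample(disputed, k)
```

Calibrating to a shared squelch rate is only one option; FDD could equally let each algorithm keep its own threshold and treat the disagreement rate itself as a signal.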

The community’s actual Sybil scores and data from past rounds could be exposed (perhaps hiding wallet addresses) for Kaggle-style competitions. This would be a great way for data scientists to learn about the shape of the data and test the performance of their models against others.
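If wallet addresses were hidden, one simple approach (an assumption on my part, not something FDD has specified) would be to replace each address with a keyed hash before publishing. The same wallet then keeps a stable pseudonym across rows, but the address is not in the dataset. The field name `donorId` and the 16-character truncation are arbitrary choices for this sketch.

```python
import hashlib
import hmac


def pseudonymize_wallet(wallet, secret_key):
    """Return a stable pseudonym for a wallet using HMAC-SHA256.

    The address is lowercased first so checksummed and plain forms of the
    same address map to the same pseudonym. secret_key must stay private,
    otherwise anyone could recompute the mapping from known addresses.
    """
    normalized = wallet.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()[:16]


def anonymize_rows(rows, secret_key):
    """Copy each row, swapping the 'wallet' field for a 'donorId' pseudonym."""
    public_rows = []
    for row in rows:
        public = {key: value for key, value in row.items() if key != "wallet"}
        public["donorId"] = pseudonymize_wallet(row["wallet"], secret_key)
        public_rows.append(public)
    return public_rows
```

Worth keeping in mind that contribution data is on-chain anyway, so a determined participant might still match pseudonyms back to wallets from amounts and grants; hashing only removes the most direct identifier.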

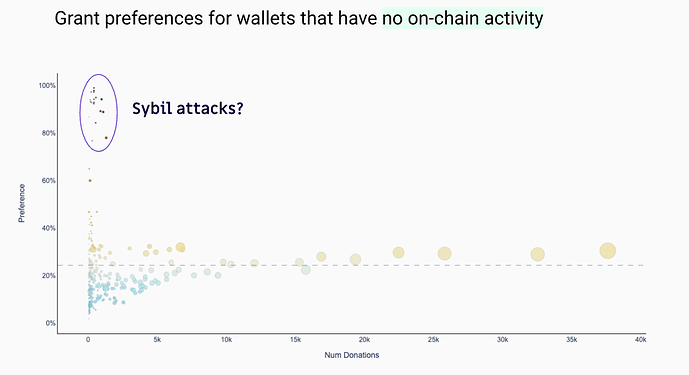

FWIW, I’m doing some work with FDD on this at the moment. It’s a fun dataset and there are some pretty clear signals. For example, the chart below shows a cluster of grants that are strongly preferred by wallets that have no on-chain credentials.