I, too, would like to see a less steep curve. I am not in favor of any form of taxation yet. This likely deserves a separate thread since it is independent of GG18 results.

My concern with a direct intervention like taxation is that it leaves the endemic issues driving inequity unaddressed, which in turn dampens the effectiveness of the taxation itself. Moreover, there is a risk that taxation diminishes performance-based input signals: grantees who show up round after round, sharing their impact and raising support from the community, will have a disproportionate share of the funding for the right reasons.

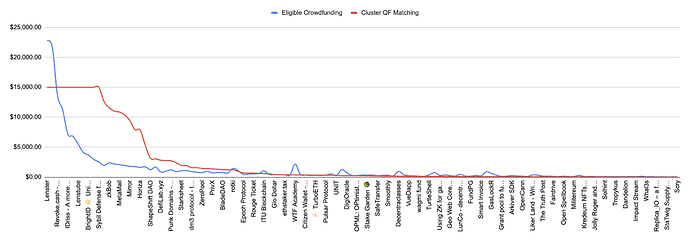

Viewing the allocation of the funding pool in the context of respective eligible crowdfunding contributions adds more to the picture. The top 15 projects in the web3 OSS round received a share of 67.5% of eligible contributions and were allocated 70.3% of the funding pool.
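
As a rough back-of-the-envelope check on those two figures, here is a minimal sketch. The variable names and the "amplification" metric are my own illustration, not something the round reports.

```python
# Figures quoted above for the top 15 projects in the web3 OSS round.
contribution_share = 67.5  # % of eligible crowdfunding contributions
pool_share = 70.3          # % of the funding pool allocated

# Hypothetical helper metrics: how far the allocation over- or under-shoots
# the share of eligible contributions.
amplification = pool_share / contribution_share
gap_points = pool_share - contribution_share

print(f"amplification: {amplification:.3f}x, gap: {gap_points:.1f} percentage points")
```

On these numbers, the pool share exceeds the contribution share by a little under three percentage points.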

However, I would still support the case for a less steep curve. Here is a non-exhaustive list of measures; if they don’t make a dent, the case for taxation becomes stronger.

- Improve discoverability of grantees on the platform who may not have as strong a marketing muscle as larger projects (a lot is happening in this direction already; a shameless self-plug for AI-driven discoverability is here).

- Find ecosystem partners who can pool funds to run dedicated feature rounds exclusively for smaller projects (based on a consensus definition), similar to the opportunities that accelerators and seed-stage funding offer to young start-ups.

- Run QF with votes weighted for subject matter expertise, so smaller projects making large strides can see gains in allocation based on curation from people in the know (Token Engineering Commons already did this in a feature round here).

- Integrate with a protocol like Hypercerts, where first the proof of impact and then evaluations help divert dollars to where the action is (food for thought here).
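
To make the weighted-votes measure above a bit more concrete, here is a minimal sketch. It assumes that expertise is expressed as a per-donor multiplier on the square root of each contribution, and that the matching pool is split in proportion to the squared sum of those weighted roots. The function name, the weight values, and the simplified matching rule are all hypothetical; this is not the mechanism Token Engineering Commons used, nor the platform's actual formula.

```python
from math import sqrt

def weighted_qf_allocation(contributions, weights, matching_pool):
    """Split matching_pool across projects using expertise-weighted QF.

    contributions: {project: {donor: amount}}
    weights:       {donor: multiplier}; donors not listed default to 1.0
    (Both the data shapes and the weighting rule are assumptions.)
    """
    raw_scores = {}
    for project, donors in contributions.items():
        # Weighted sum of square roots of each donor's contribution.
        root_sum = sum(weights.get(d, 1.0) * sqrt(a) for d, a in donors.items())
        raw_scores[project] = root_sum ** 2

    total = sum(raw_scores.values())
    if total == 0:
        return {p: 0.0 for p in raw_scores}
    # Normalize so the allocations add up to the matching pool.
    return {p: matching_pool * s / total for p, s in raw_scores.items()}

# Toy data: a larger project with four donors, a smaller one with two,
# one of whom is treated as a subject matter expert.
contributions = {
    "big":   {"a": 25, "b": 25, "c": 25, "d": 25},
    "small": {"e": 25, "expert": 25},
}

unweighted = weighted_qf_allocation(contributions, {}, 1000)
weighted = weighted_qf_allocation(contributions, {"expert": 3.0}, 1000)
print(unweighted)
print(weighted)
```

In this toy setup, the single expert weight moves the smaller project from a 200 to a 500 share of a 1000 pool. How weights get assigned, and by whom, is the hard part and is left open here.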

Unfortunately, none of these are silver bullets that will change things overnight, but I am hopeful that ease of discoverability can make an impact in the near term.