GR13 Governance Brief

Grants Round 13 has been a monumental round for improving the community ownership, legitimacy, and credible neutrality of our systems. This post discusses how the Fraud Detection & Defense workstream (FDD) has worked to stay ahead in the red team versus blue team game. It also documents the decisions and reasons behind our subjective judgements. This workstream is tasked with minimizing the effect of these liabilities on the community.

Sybil Defenders

Sybil Detection Improvements

FDD is responsible for detecting & deterring potential sybil accounts. In the past we have done this using a semi-supervised reinforcement machine learning algorithm run by BlockScience. This season we transferred the ownership of the Anti-Sybil Operationalized Process to FDD with contributors running it end-to-end.

During Season 13 we identified multiple new behaviors by the sybil attackers. Part of this was made possible by our Matrix squad. The squad developed a classification of all sybil behaviors and began to challenge assumptions held in the current process. Another effort helped us to understand the nuances behind the behaviors such as “airdrop farming” or “donation recycling”.

GR13 also saw the first run of our “community model”, a second algorithm built from scratch to test and improve the BlockScience-built ASOP model. FDD intends to use both the newly developed community model and the ASOP model in the future, potentially as an ensemble with the human evaluations.

The human evaluators, commonly referred to as “sybil hunters”, are key to the system. Not only do they help train the system while actively providing inputs, they also provide statistical validation for the model. Humans-in-the-loop (HITL) machine learning combined with rewards for evaluators allows us to decentralize the inputs to the system. This means the system “thinks” like the community, not the engineers that built it.

GR13 saw the number of human evaluations increase by 100% compared to GR12, while the cost per evaluation fell by about 68% (from $4.39 to $1.42)!

- GR10 - Core team does all evaluations

- GR11 = $1.25/eval | $1,750 for 1,400 evaluations by 8 contributors

  - First DAO led evaluations - Probably higher cost due to expert time from core team and SMEs for help

  - Fairly low quality, little training done

- GR12 = $4.39/eval | $26,350 for 6,000 evaluations by 25 contributors

  - Opened up participation to all GitcoinDAO contributors

  - First inter-reviewer reliability analysis to improve inputs

  - Focused on improving quality of data entering the system

  - Higher cost to get the inputs right and attract new people to participate

- GR13 = $1.42/eval | $17,050 for 12,000 evaluations by 37 contributors

  - Second time “sybil hunters” improve quality

  - Established systems for recruiting and executing

  - Focused on improving quality while lowering cost/eval

  - Brought out the meme culture in FDD
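The per-evaluation costs and round-over-round changes can be cross-checked from the totals listed above. This is a minimal sketch that uses only the reported figures; the variable and function names are illustrative and not part of any FDD tooling.

```python
# (total cost in USD, number of evaluations) as reported for each round
rounds = {
    "GR11": (1_750, 1_400),
    "GR12": (26_350, 6_000),
    "GR13": (17_050, 12_000),
}

# Cost per evaluation = total cost / number of evaluations
cost_per_eval = {name: cost / evals for name, (cost, evals) in rounds.items()}


def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


evals_change = pct_change(rounds["GR12"][1], rounds["GR13"][1])
cost_change = pct_change(cost_per_eval["GR12"], cost_per_eval["GR13"])

for name, value in cost_per_eval.items():
    print(f"{name}: ${value:.2f}/eval")
print(f"GR12 -> GR13 evaluations: {evals_change:+.0f}%")
print(f"GR12 -> GR13 cost per eval: {cost_change:+.0f}%")
```

A percentage decrease can never exceed 100%, so the GR12 to GR13 drop is best read as the cost per evaluation falling to roughly a third of its previous level.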

GR13 Sybil Incidence & Flagging

BlockScience GR13 Statistical Review

A total of 11.9% of the Gitcoin users making donations in GR13 were flagged during the round. The sybil incidence during this round is significantly lower than in GR12, estimated at approximately 70% of what it was before.

The Flagging Efficiency was 84% (lower bound: 77%, upper bound: 93%), which means that the combined process is under-flagging sybils compared to what humans would do.

Please note that some metrics are followed by a confidence interval in brackets, in keeping with statistical analysis practices.

GR13

- Estimated Sybil Incidence: 14.1% +/-1.3% (95% CI)

- Estimated # of Sybil Users: 2453 (between 2227 and 2680 w/ 95% CI)

- Number of Flagged Users: 2071

- # of flags due to humans: 951 (45.9% of flags)

- # of flags due to heuristics: 1067 (51.5% of flags)

- # of flags due to algorithms: 53 (2.6% of flags)

- Total contributions flagged: TBD

- Estimated Flagging Per Incidence: 84%

GR12

- Estimated Sybil Incidence: 16.4% (between 14.5% and 18.3%)

- Number of Flagged Users: 8100 (27.9% of total)

- % of flags due to humans: 19.4%

- % of flags due to heuristics: 34.7%

- % of flags due to algorithms: 49.2%

- Total contributions flagged: 115k (21.7% of total)

- Estimated Flagging Per Incidence: 170% (between 118% and 249%)

GR11

- Estimated Sybil Incidence: 6.4% (between 3.6% and 9.3%)

- Number of Flagged Users: 853 (5.3% of total)

- % of flags due to humans: 46.1%

- % of flags due to heuristics: 14.3%

- % of flags due to algorithms: 39.6%

- Total contributions flagged: 29.3k (6.6% of total)

- Estimated Flagging Per Incidence: 83% (between 57% and 147%)
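The GR13 “Estimated Flagging Per Incidence” figure appears to be the number of flagged users divided by the estimated number of sybil users, with its bounds obtained by dividing by the opposite ends of the sybil estimate. The sketch below reproduces the reported 84% (77% to 93%) on that assumption; it is a consistency check on the published numbers, not BlockScience's actual computation.

```python
# GR13 figures as reported above
flagged_users = 2071
est_sybil_users = 2453
est_low, est_high = 2227, 2680  # 95% CI on the estimated number of sybil users
flags_by_source = {"humans": 951, "heuristics": 1067, "algorithms": 53}

# Flagging per incidence = flagged users / estimated sybil users.
# A larger sybil estimate gives a lower efficiency, so the bounds swap.
efficiency = flagged_users / est_sybil_users
eff_low = flagged_users / est_high
eff_high = flagged_users / est_low

# Share of all flags contributed by each source
shares = {source: n / flagged_users for source, n in flags_by_source.items()}

print(f"Flagging per incidence: {efficiency:.0%} ({eff_low:.0%} to {eff_high:.0%})")
for source, share in shares.items():
    print(f"Flags due to {source}: {share:.1%}")
```

A value below 100% indicates fewer flags than the estimated sybil population (under-flagging); a value above 100%, as in GR12, indicates more flags than the estimate.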

Compared to GR12, we saw a significant decrease in sybil activity. Even if we assume the $1.4 million in individual donations came from half the number of donors who donated over $3 million in GR12, we still see a significant drop in sybil behavior.

For comparison, between GR11 and GR12 the estimated sybil incidence increased by a factor of 2.6x (between 1.6x and 5.0x), according to human evaluation statistics. That increase was matched by a more than proportional response in flagged users, which made the GR12 process appear “over efficient”. The interpretation is that the combination of human evaluations, heuristics, squelches, and algorithms generated more flags than we would have had if humans alone had flagged the entire dataset of users.

GR13 Sybil Detection Details

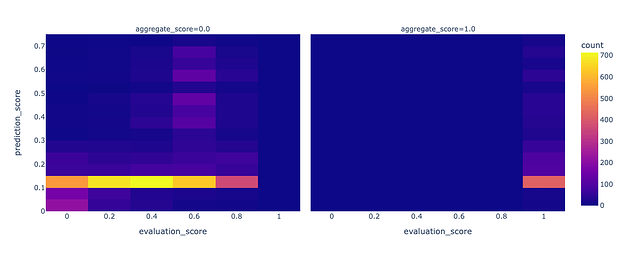

Gitcoin Grants sybil detection depends on three fundamental inputs: survey answers provided by the human evaluator squads, heuristics provided by BlockScience, and an ML model that uses the previous two pieces to fill in “scores” for how likely a user is to be a sybil. With those pieces available, it is possible to compute an “aggregate score” that decides whether a user is a sybil or not.

This aggregate score depends on a prioritization rule which works as follows:

- Has the user been evaluated by a human? If yes, and if their score is 1.0 (is_sybil = True & confidence = high), then flag the user. If the score is 0 or 0.2, then do not flag.

- Else, has the user been evaluated by a heuristic? If yes, then simply use whatever score has been attributed.

- For all remaining users, use the flag as evaluated by the ML prediction score.
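The prioritization rule above can be sketched as a small function. This is an illustration only: the function name, the `None` convention for “not evaluated”, the 0.5 threshold, and the fall-through for human scores other than 1.0, 0, or 0.2 are all assumptions, since the post does not specify them.

```python
from typing import Optional

# Hypothetical cut-off: the post does not state the threshold applied to
# heuristic or ML scores.
FLAG_THRESHOLD = 0.5


def is_flagged(
    human_score: Optional[float],
    heuristic_score: Optional[float],
    ml_score: float,
    threshold: float = FLAG_THRESHOLD,
) -> bool:
    """Apply the human > heuristic > ML prioritization rule to one user."""
    # 1. Human evaluations take priority when they are decisive.
    if human_score is not None:
        if human_score == 1.0:         # is_sybil = True & confidence = high
            return True
        if human_score in (0.0, 0.2):  # evaluated as not sybil
            return False
        # Other human scores are not covered by the stated rule; this sketch
        # assumes they fall through to the next source.
    # 2. Otherwise use the heuristic score, if one was attributed.
    if heuristic_score is not None:
        return heuristic_score >= threshold
    # 3. For all remaining users, use the ML prediction score.
    return ml_score >= threshold


print(is_flagged(1.0, None, 0.1))    # human says sybil with high confidence
print(is_flagged(0.2, 1.0, 0.9))     # human says not sybil, overrides the rest
print(is_flagged(None, None, 0.7))   # only the ML prediction is available
```

The ordering reflects the design choice described above: a decisive human judgment always wins, and the ML prediction is only used for users that no human or heuristic has scored.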

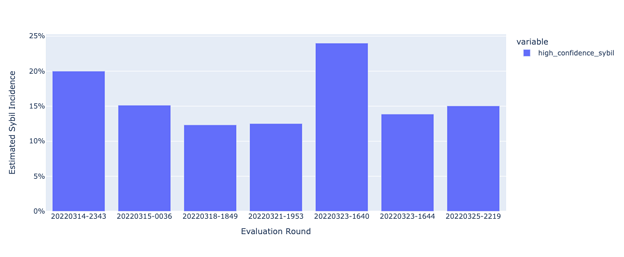

GR13 is the second time we have had an indirect proxy for how sybil incidence evolves during the round. We increased the number of human evaluations from 6,000 to 12,000. The estimated sybil incidence for each round of evaluations is illustrated in the accompanying figure.

Notice the spike in human evaluators catching sybils during the fifth round. This may be a sign that the evaluators were learning from each other about a newly discovered behavior present in this round. The behavior included a video from user Imeosgo.

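“The RSS3 airdrop amounts can now be looked up, and I can claim tens of thousands of yuan. Last year I claimed airdrops from several projects and pocketed several hundred thousand.” (translated from Chinese)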

The user encourages people to create sybil accounts and donate to grants in order to receive airdrops. (Post on Airdrop Farming in the works!) This hypothesis is further supported by traffic spikes from China during the same timeframe.

While these anecdotes are intuitive, FDD will work to invalidate the hypothesis between rounds and use the information to continue improving Gitcoin’s fraud detection & defense effort…

Grants Intelligence Agency

The GIA evolved from a couple of initiatives and workgroups within the FDD two months before GR13 began. It has four squads: Development, Review Quality, Policy and Rewards. The main goal of the GIA is to handle grant reviews and appeals. While executing on the current round is the primary goal, decentralizing and opening up our processes to the community is also of high importance.


Grant eligibility is handled by a collaboration of multiple working groups. They review new grant applications and any grants which are flagged by the community. The grant review process is also continually being decentralized. Feedback from the process feeds into the policy via an English common law method.

When the stewards ratify the round results, they are also approving the sanctions adjudicated by the FDD workstream.

Full transparency to the community* is available at:

- Grant Approvals - When new grant applications are received

- Grant Disputes - When a grant is “flagged” by a user

- Grant Appeals - When a grant denied eligibility argues an incorrect judgment

*User Actions & Reviews is currently in “open review”, allowing select participation by stewards, due to sensitive Personally Identifiable Information (PII) and potential vulnerability to counter-attacks.

Additional transparency for all flags is provided at @gitcoindisputes Twitter.

FDD is dependent on the Gitcoin Holdings team for a few operational needs including some technical infrastructure, administrative access, and tagging of grants for inclusion in the eco & cause rounds. The coordination and communication between FDD | GIA, Gitcoin Holdings, PGF | Grants Operations squad, and DAOops | Support squad needs to continue to evolve and improve for matters related to grant eligibility.

New Grant Application Review Process

Between GR12 and GR13, FDD shifted to an open grant review period beginning 1 month before the round and ending 1 week into it. The Grant Review Quality squad in FDD is responsible for ensuring that reviews are handled in a timely manner and that the reviews are of a high quality.

Before the round began, the Grant Review Quality squad ensured that the grant application backlog was reviewed and that all new grants were approved or denied before February 11th, 2022. During the round, the grant approvers hit their goal of approving grants in 48 hours or less (except on weekends).

Due to complications with getting data from Gitcoin Holdings, we were unable to use our newly developed tool to help scale the grant review efforts. Luckily, FDD had approved a review quality budget which contained a “plan B” for grant reviews: using the previous system. (Which cost the DAO a significant amount!)

A bird's-eye view of the progress in grant reviews

- GR10 | 3 Reviewers / NA cost

  - First time using outside reviewers

  - FDD did not have a budget yet! Volunteer help.

- GR11 | 7 Reviewers / $10,000

  - Opened up to the community even more

  - Multiple payment models tested

- GR12 | 8 Reviewers / $14,133 (1 approver - Joe)

  - Experimented with two grant review squads

  - Recruited more community members and started to focus on quality

- GR13 | $5.38 cost per review & 2.3 average reviews per grant

  - 2,300 Reviews / 1000 Grants (Duplication error made this an estimate)

  - 7 Reviewers / $12,380 (2 approvers - Joe, Zer8)

  - The main focus was balancing between high quality reviews and number of reviews

  - Working towards Ethelo integration, senior grant reviewers => Trusted seed reviewers
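The two GR13 headline figures follow directly from the totals in the list. A quick check, using only the reported (and, per the duplication error, estimated) counts:

```python
# GR13 grant review totals as reported above (review count is an estimate)
reviews = 2_300
grants = 1_000
total_cost_usd = 12_380

cost_per_review = total_cost_usd / reviews
reviews_per_grant = reviews / grants

print(f"Cost per review: ${cost_per_review:.2f}")
print(f"Average reviews per grant: {reviews_per_grant:.1f}")
```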

The experiment run in GR12 using Ethelo for the Grant Disputes not only produced the same outcomes as the former review process, it also garnered 53 reviewers in 7 days. Of the reviewers who used the system, 65% reviewed all of the grants presented. All this for the reward of a POAP! Using the Ethelo system in the future is likely to produce cost savings.

Grants Disputes & Appeals

The policy squad exists to create and maintain policies affecting platform use and grant round participation. They set definitions based on reviewer feedback and advise on judgements for flags, disputes, appeals, and sanctions during the round.

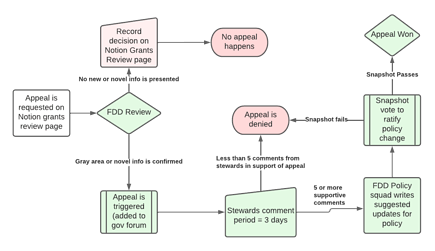

During GR13, a discussion on the governance forum included analysis and recommendations for a grant eligibility policy change triggered by the BrightID appeal. This discussion found the need for policy to be an iterative process informed by the public goods workstream and the community as well as FDD. Should Gitcoin model its authority like English common law? What alternatives exist?

The outcome of the BrightID appeal was found using a well thought-out process which needed more education and validation from the community to execute. This is the process we had recommended prior to BrightID being the first appeal to make it to the level of needing to change a policy!

However, the collaboration between FDD & PGF Grants Ops showed us that the snapshot vote was unnecessary at this level. The process ended up playing out in these steps:

- BrightID submits their request for appeal

- The FDD source council hears the case as an appellate judge would to determine if the appeal has merit. In this case, they found it to be a novel situation.

- The appeal was then posted to the governance forum for discussion. The FDD recommended a steward vote for legitimacy of the process, but the post did not get 5 stewards commenting in approval of moving it to a vote.

- FDD then went with its judgment and reactivated the grant. (Technically, the grant was reactivated before the round so it could participate rather than “being in jail while awaiting trial.”)

The first appeal to require a policy shift produced deep discussion and general agreement about how the DAO should process appeals, and found ways to increase the decentralization of the entire process. The real question was: which appeals should reach a Steward vote, and which can be disposed of by (currently) the FDD or a decentralized group?

While the policy change suggestion did not proceed to a Snapshot vote, it resulted in progress for Gitcoin. It also prompted suggestions of a market cap limit for projects with governance tokens, whereas at present projects with tokens are denied. The policy change itself was deferred until after the grant round.

Gitcoin Grants policy is currently held on a “living document” which can be found on the new Knowledge base which was recently installed at support.gitcoin.co. This new open source Gitbook instance, which is maintained by the support squad, replaces the previous closed source Happyfox knowledge base.

FDD Statement on GR13

The sybil attacks slowed down this round, but they are not stopping. They are evolving new and more complex tactics which require FDD’s best effort to defend. Last round we commented on our dependence on Gitcoin Holdings to supply new sources of data outside of what we are whitelisted to access.

FDD requires an appropriate level of data access to quickly respond to new red team strategies. The blue team is at a significant disadvantage when we must wait months to get approval to access the data needed to defend against new styles and classes of attack.

Simply getting the publicly available data about grants to integrate into our review software took the entire time between GR12 and GR13 due to legal concerns. The issue was finally solved for us on 3/11, two days after the start of GR13. We could have redirected the current integration which sends information to Notion. (Not to mention we lost the testing opportunity and all the learning we would have gotten by using the fully operational software which only needed the integration to avoid manual inputting of the data. This issue cost the DAO around $10-15k.)

We currently have two open data requests which have both been open for over a month. How is the Fraud Detection & Defense workstream supposed to function properly when we cannot access data in a dynamic way which allows us to respond to new behaviors?

We again encourage Gitcoin Holdings to make ALL data that isn’t legally protected available to the FDD workstream. Our data storage layer squad can work with Gitcoin Holdings engineering to set up a warehouse with proper roles and permissioning for DAO contributors and the public. Please help us to innovate further and faster.

Disruption Joe, FDD Workstream Lead