With inputs from: Carl Cervone, David Gasquez, Rashmi Abbigeri, Sam McCarthy, Sov, Umar Khan

This report is part of Gitcoin Grants 24’s Strategic Sensemaking Framework, an initiative designed to identify Ethereum’s most meaningful, urgent, and solvable problems through structured community research.

One-minute Summary

Ethereum is scaling rapidly, but fragmentation, opaque data, and siloed coordination threaten its ability to function as a unified network. This report proposes a funding domain for open data infrastructure—the explorers, analytics, standards, and metrics that allow anyone, inside or outside the ecosystem, to verify growth, adoption, and impact. In traditional finance, data is often paywalled and proprietary; in Ethereum, it should remain open, credible, and accessible to all.

Ethereum’s public, verifiable data is one of its strongest differentiators and a key selling point for builders, institutions, and policymakers. However, today it remains underleveraged.

Even though Ethereum’s raw data is open, much of the usable data layer is funneled through proprietary platforms that dominate usage and market share. Early successes from projects like Blockscout, DefiLlama, growthepie, and L2Beat show the influence that credible, open data can have in shaping narratives, guiding capital, and building trust.

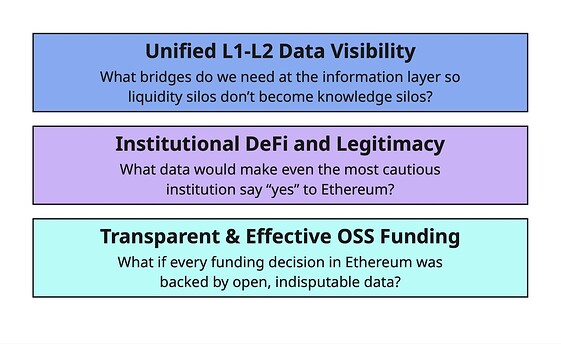

Drawing on cross-ecosystem research, adoption data, and community insights, we map 20+ use cases into a strategic taxonomy linked to three core opportunities for 2025: unified L1–L2 data visibility, institutional DeFi legitimacy, and transparent OSS funding. The goal: direct capital toward closing the most critical coordination gaps and ensure Ethereum’s open data infrastructure strengthens its credibility and resilience for the long term.

Problem: Scaling Success, New Coordination Risks

What specific Ethereum problem are you addressing? Why is this urgent and significant right now? What evidence supports the importance of this problem?

Projects like Blockscout, DefiLlama, growthepie, and L2Beat have shown what’s possible when Ethereum’s data is open, verifiable, and accessible to all. These projects have become indispensable references across the ecosystem, powering analysis, informing governance, and shaping narratives without relying on gated or paywalled business models. Yet, despite blockchain data being technically open, most of it remains hard to access and use meaningfully without depending on proprietary platforms and centralized RPCs.

Ethereum in 2025 faces a pivotal moment. Scaling via rollups has succeeded, but it has also brought fragmentation, coordination challenges, and growing reliance on centralized infrastructure. The urgency is compounded by the rapid growth of L2s, DAOs, DeFi protocols, and applications generating increasingly complex datasets. Too often, ecosystem decisions on funding, governance, and protocol development still rely on anecdotal signals rather than verifiable evidence. This is a gap Vitalik Buterin has warned about in his critique of public goods funding being driven by “social desirability bias.”

Ethereum’s public, verifiable data is one of its strongest differentiators and a key selling point for builders, institutions, and policymakers. However, today it remains underleveraged. The success of these open data role models shows what’s possible, but they are still the exception.

How might we attract and support the next wave of builders who can scale these successes into a richer, more connected Ethereum data ecosystem?

Impact Potential: Three Core Opportunities Built on Robust Measurement

If open data is to become one of Ethereum’s flagship strengths, robust measurement must form the foundation. Without credible, standardized, and context-rich metrics, even the best explorers, dashboards, or data standards risk becoming noise instead of actionable signal.

Robust measurement means:

- Consistency – Shared baselines (e.g., active users, protocol integrations) that allow progress to be compared meaningfully across domains.

- Context – Vertical-specific metrics that capture local impact (e.g., validator coverage, DAO proposal participation) alongside network-wide data.

- Integrity – Transparent methodologies and verifiable sources, so that numbers are trusted both inside and outside the ecosystem.

While other chains may move faster by sacrificing decentralization or governance depth, Ethereum’s edge lies in moving with both speed and credibility, proving its health, growth, and impact with data that is open, verifiable, and actionable.

With that foundation in place, open data infrastructure can unlock three core opportunities in 2025:

- Unified L1–L2 Data Visibility: Ethereum’s growth now spans dozens of active Layer-2 networks, each generating its own transaction, liquidity, and governance data. Without aggregation, this activity remains siloed, making it hard to see the ecosystem as a whole. The demand for cross-network standards (e.g., ERC-7683, which standardizes cross-chain intents) signals a clear need for integration not just at the transaction layer, but at the information layer. Open data infrastructure that unifies L1 and L2 metrics would let the community, builders, and external observers track adoption, usage, and health across the entire Ethereum stack. Even if liquidity remains fragmented, information should not.

- Institutional DeFi and Legitimacy: Billions of dollars of patient capital could flow into the ecosystem if we demonstrate robust risk analytics, real-time monitoring, and trustworthy data onchain. Providing rich, open data (on trading volumes, liquidity, collateralization, DAO treasury holdings, etc.) can give institutions and regulators confidence. By funding open analytics and data infrastructure now, Ethereum positions itself as the most transparent and analyzable financial system in the world, attracting institutional adoption while staying true to decentralization.

- Transparent and Effective OSS Funding: The coming years present an opportunity to scale capital allocation experiments into a core strength of Ethereum’s ecosystem. Importantly, such funding needs robust data to be effective: we need to measure impact, track contributions, and hold recipients accountable in the open. In essence, open data enables evidence-based governance. It replaces guesswork or politics with objective metrics, leading to smarter grant allocations, protocol upgrades guided by real usage patterns, and DAO votes aligned with onchain reality. The result is a more adaptive and efficient ecosystem, allocating resources where they have the highest impact.
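The Python sketch below makes unified L1–L2 visibility more concrete by merging per-chain activity into one view. It rests on assumptions throughout. The shared schema (`ChainActivity`) and the `unify` function are hypothetical, and the sample figures are made up. Nothing here is drawn from an existing explorer or API. The sketch shows why shared standards matter at the information layer: transaction counts add up across chains, but an address active on several networks should be counted once. Unique users therefore come from a set union, not a sum.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ChainActivity:
    """Hypothetical shared schema for one chain's activity over a period."""
    chain: str                       # illustrative label, e.g. "optimism"
    tx_count: int                    # transactions observed in the period
    active_addresses: Set[str] = field(default_factory=set)


def unify(records: List[ChainActivity]) -> Dict[str, object]:
    """Combine per-chain records into one L1+L2 view.

    Transactions are additive across chains. Active addresses are not,
    because one address may be active on several chains, so we take the
    set union instead of summing per-chain counts.
    """
    total_tx = sum(r.tx_count for r in records)
    unique_addresses = set().union(*(r.active_addresses for r in records))
    naive_sum = sum(len(r.active_addresses) for r in records)
    return {
        "total_tx": total_tx,
        "unique_active_addresses": len(unique_addresses),
        "double_counted": naive_sum - len(unique_addresses),
        "per_chain_tx": {r.chain: r.tx_count for r in records},
    }


if __name__ == "__main__":
    sample = [  # made-up numbers for illustration only
        ChainActivity("ethereum", 1_000, {"0xa", "0xb", "0xc"}),
        ChainActivity("optimism", 2_500, {"0xb", "0xd"}),
        ChainActivity("arbitrum", 3_000, {"0xa", "0xd", "0xe"}),
    ]
    print(unify(sample))
```

With the sample data, naive per-chain summing reports 8 active addresses, while the unified view reports 5.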

Why Open Data Tooling is the Key Enabler

When we view Ethereum as an interconnected ecosystem of data flows rather than a single chain, the key question becomes: where should we invest in open data to create the greatest impact?

Open data tooling, from block explorers and standardized data models to public analytics dashboards, robust impact metrics, and funding trackers, is the connective tissue that holds a decentralized ecosystem together. These tools empower the community to make sense of a complex multi-chain landscape, coordinate decisions, and uphold transparency and trust.

In their absence, Ethereum risks becoming opaque, brittle, and fragmented, falling behind other chains that offer faster, more seamless user experiences. Open data tooling is what enables Ethereum to move with efficiency and speed while staying true to its founding values: empowering the community to see clearly, govern wisely, and act with confidence.

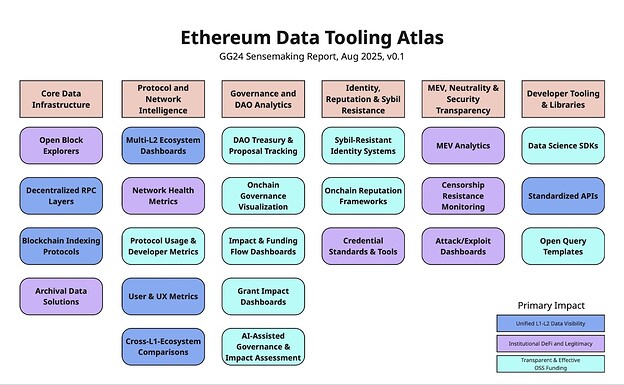

The Ethereum Data Tooling Atlas: Mapping the Open Data Landscape

The taxonomy above organizes existing and potential tools into six verticals, each mapped to the primary opportunity it most impacts. This serves as a blueprint for targeted funding that closes the most critical coordination gaps and aligns Ethereum’s data infrastructure with its strategic future.

But an atlas is only as accurate as the perspectives that shape it. We need community input:

What opportunities are we missing? Which tools and use cases deserve more attention? What belongs here, and what should be excluded?

By treating this as a living sensemaking exercise, we can elevate the Atlas from a static map into a dynamic navigation tool that reflects the collective intelligence of the Ethereum ecosystem and guides where our energies and investments should flow.

Sensemaking Analysis

What sensemaking tools did you use? What sources did you use for this analysis? How did you aggregate your data/findings?

The analysis draws from primary research into Ethereum’s data tooling ecosystem, cross-chain public goods models, funding trends, and ecosystem interviews.

For instance, multiple chains (e.g., Polkadot and Cosmos) are funding public dashboards to monitor cross-chain health. Notably, Polkadot allocated over $1 million from its treasury in 2024 to fund explorers and indexing tools. Cosmos’s “Map of Zones” dashboard visualizes cross-chain activity across 100+ chains. We also compared how open tools (Dune, Blockscout) perform versus closed systems in accessibility, redundancy, and community trust.

The core synthesis emerged through the construction of a structured visual taxonomy. This framework mapped 20+ distinct open data use cases across six major verticals, as outlined above. Each category was evaluated through the lens of three converging inflection points in Ethereum’s evolution: Unified L1–L2 Data Visibility, Institutional DeFi & Legitimacy, and Open Source Funding at Scale.

Sources included Open Source Observer adoption data, DeepDAO’s treasury monitors, Gitcoin grant rounds, and dashboards from Dune, L2Beat, and Blockscout. Key thought leadership came from Vitalik’s essays on credible neutrality, alignment, and the “Scourge” roadmap phase, as well as Kevin Owocki’s reflections on meaning awareness. Community threads on ETHResearch, Optimism forums, and Arbitrum DAO provided qualitative insight into what developers and funders actually use.

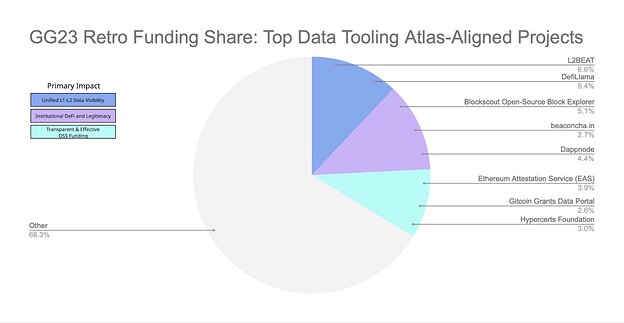

Data triangulation revealed Gitcoin as a unique validator of demand. Tools like Open Source Observer and Blockscout were not only widely adopted but also consistently funded through matching pools. Notably, 8 of the top 30 projects selected for the GG23 Mature Builders Retro Funding round through data-driven, metrics-based rankings aligned with use cases identified in the taxonomy. Together they received a third of the retro funding pool from badgeholders.

In short, both qualitative (forum debates, grantee feedback) and quantitative (donation totals, usage stats) signals converged to identify a high-leverage opportunity: fund a domain that can unify fractured data efforts and give the ecosystem shared visibility across layers, governance, and funding. Without this, Ethereum risks data opacity at a time when trust, accountability, and interoperability matter more than ever.

Gitcoin’s Unique Role & Fundraising

How can Gitcoin uniquely help solve this problem? Why is a network better suited to solve this than existing organizations?

Gitcoin is uniquely positioned to address this challenge as a decentralized, community-aligned platform purpose-built to fund digital public goods. Traditional institutions hesitate to fund tools that don’t offer direct returns. Gitcoin’s strength lies in convening fragmented stakeholders, including developers, donors, L2 teams, and researchers, around a shared vision for open tooling. Gitcoin’s networked model, where users, DAOs, and sponsors collectively fund what they value, has already supported domain-aligned projects like Beaconcha.in, Blockscout, BrightID, DAOStar, DAppNode, EAS, Human Passport (previously Gitcoin Passport), L2Beat, and OSO.

Fundraising a $50K+ pool is realistic. Even without hard commitments so far, past rounds show an established pattern of support for these projects, and a themed round creates a natural opportunity for co-funding.

If the chosen capital allocation mechanism includes individual donations, donors will likely mirror past patterns: thousands of small contributions can be expected, particularly if the funded tools include free APIs, dashboards, or analytics libraries that benefit the community.

Success Measurement & Reflection

What specific outcomes will show success within 6 months? How will we measure genuine impact beyond just activity metrics? What will make the Ethereum community genuinely glad we funded this domain long-term?

6-month Success Criteria:

- Tangible Deliverables: High-performing established projects release updates, dashboards, or APIs improving data accessibility and reliability.

- New Tools Emerge: For example, the round leads to the development of an “Ethereum Data Commons” or a multi-chain explorer with real-time insights across L2s.

- User Adoption & Community Growth: Funded tools and libraries see measurable increases in usage.

Genuine Impact Metrics:

To make open data a flagship strength of Ethereum, we propose a layered approach to measurement, where each tier builds on the one below, starting simple, encouraging diverse methods, and engaging deep research over time.

| Metric Tier | Purpose | Approach | Examples |
|---|---|---|---|
| Baseline Metrics | Establish a minimum bar of relevance and activity across all verticals | Start Simple: Begin with common, easy-to-capture indicators to ensure coverage without overcomplication | Active users, GitHub activity, protocol integrations |
| Vertical-Specific Metrics | Capture local impact within a given sub-category | Embrace Competing Implementations: Allow multiple methodologies to coexist, fostering debate, innovation, and iteration | Validator coverage (MEV dashboards), proposal throughput (governance tools), attestation usage (identity frameworks) |
| Longitudinal Metrics | Assess whether local impact leads to lasting, ecosystem-wide change | Engage the Research Community: Provide open, well-documented datasets that attract analysis, experimentation, and refinement | Governance proposal adoption of metrics, funding ROI tracking, open usage & citation of dashboards/APIs |
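The Python sketch below reads the three tiers as a pipeline. Every project first faces a baseline gate. Several vertical-specific methods are then reported side by side. A final longitudinal check asks whether usage persists. Every name, field, and threshold is a hypothetical placeholder for illustration, not a proposed standard. This covers `Project`, `passes_baseline`, `VERTICAL_METHODS`, `usage_trend`, and the 100-user and 10-commit bars.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Project:
    """Hypothetical project record; field names are illustrative only."""
    name: str
    vertical: str                     # e.g. "governance"
    active_users: int                 # baseline indicator
    github_commits_90d: int           # baseline indicator
    vertical_data: Dict[str, float] = field(default_factory=dict)
    monthly_usage: List[float] = field(default_factory=list)  # oldest first


# Tier 1 (Baseline): one simple bar for all verticals. Thresholds are assumptions.
def passes_baseline(p: Project, min_users: int = 100, min_commits: int = 10) -> bool:
    return p.active_users >= min_users and p.github_commits_90d >= min_commits


# Tier 2 (Vertical-specific): competing methods coexist and are reported side
# by side instead of being collapsed into a single number.
VERTICAL_METHODS: Dict[str, Dict[str, Callable[[Project], float]]] = {
    "governance": {
        "proposal_throughput": lambda p: p.vertical_data.get("proposals_processed", 0.0),
        "voter_participation": lambda p: (
            p.vertical_data.get("votes_cast", 0.0)
            / max(p.vertical_data.get("eligible_voters", 0.0), 1.0)
        ),
    },
}


def vertical_scores(p: Project) -> Dict[str, float]:
    methods = VERTICAL_METHODS.get(p.vertical, {})
    return {name: method(p) for name, method in methods.items()}


# Tier 3 (Longitudinal): does usage last? Ratio of the average of the last
# `window` months to the average of the first `window` months.
def usage_trend(p: Project, window: int = 3) -> Optional[float]:
    if len(p.monthly_usage) < 2 * window:
        return None  # not enough history to judge lasting change
    early = sum(p.monthly_usage[:window]) / window
    late = sum(p.monthly_usage[-window:]) / window
    return late / early if early > 0 else None


def evaluate(p: Project) -> dict:
    """Apply the tiers in order; projects below the baseline stop at tier 1."""
    if not passes_baseline(p):
        return {"project": p.name, "baseline": False}
    return {
        "project": p.name,
        "baseline": True,
        "vertical": vertical_scores(p),
        "usage_trend": usage_trend(p),
    }
```

The competing methods stay in a dictionary instead of being averaged. This mirrors the “Embrace Competing Implementations” approach: reviewers see each methodology’s result and can debate which one best captures local impact.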

Satisfaction Test:

Ethereum users will be glad this domain was funded if:

- Open tooling adoption: The share of ecosystem analytics and explorer traffic served by open, self-hostable tools increases.

- Transparent decision-making: A majority of governance proposals in the top 20 DAOs by treasury size cite open, verifiable data sources in their rationale.

- Funder confidence: Funders report higher confidence scores in post-grant surveys, citing clearer data on project impact and health.

- Digital public goods outcomes: Funded open data tools show measurable improvements in contributor retention, protocol usage, and governance efficiency
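Once the underlying data is collected, the first three tests above could be checked mechanically. The Python sketch below is an illustration only, and all of its inputs are hypothetical:

- the request counts;
- the `Proposal` record with a `cites_open_data` flag;
- the externally supplied set of top-20 DAOs;
- the pre-grant baseline survey scores.

It also assumes that “majority” means more than half of all pooled proposals from those DAOs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Set, Tuple


@dataclass
class Proposal:
    """Hypothetical record of one governance proposal."""
    dao: str
    cites_open_data: bool  # does the rationale cite an open, verifiable source?


def open_traffic_share(open_requests: int, total_requests: int) -> float:
    """Share of analytics/explorer traffic served by open, self-hostable tools."""
    return open_requests / total_requests if total_requests else 0.0


def open_adoption_increased(before: Tuple[int, int], after: Tuple[int, int]) -> bool:
    """Test 1: did the open-tool share of traffic rise between two periods?"""
    return open_traffic_share(*after) > open_traffic_share(*before)


def majority_cite_open_data(proposals: List[Proposal], top_daos: Set[str]) -> bool:
    """Test 2: do more than half of the top DAOs' proposals cite open data?"""
    relevant = [p for p in proposals if p.dao in top_daos]
    if not relevant:
        return False
    citing = sum(1 for p in relevant if p.cites_open_data)
    return citing * 2 > len(relevant)


def funder_confidence_improved(pre: List[float], post: List[float]) -> bool:
    """Test 3: is the mean post-grant survey score higher than the baseline?"""
    return mean(post) > mean(pre)
```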

We’ve seen the sparks of this in past rounds and experiments: community-funded tools helping win retroactive rewards, analytics improving how we allocate millions in public goods funding, and grassroots data projects influencing major decisions. The next step is to strengthen and expand these efforts.

Domain Information

- Is this a GG24 Domain Proposal? Yes

Note: While the Data Tooling Atlas provides a broad map of opportunities, the initial funding round will likely focus on a narrower set of priorities rather than attempting to cover the full Atlas. The emphasis should be on use cases that: (a) deliver impact across more than one of the three core opportunities (e.g., funding flow tools that advance both transparent OSS funding and institutional legitimacy), and (b) align with areas where there is already interest and deployable capital from funders.

- Domain Experts: TBD. Final selection will prioritize individuals drawn from a cross-section of:

  - Technical Leaders: Core developers, Layer-2 engineers, maintainers of open-source infrastructure.

  - Data Practitioners: Builders or researchers with experience in blockchain analytics, governance tooling, MEV monitoring, open standards.

  - Governance Leaders: Active contributors to DAOs, funding committees, or protocol governance with a track record of transparent decision-making.

  - Ecosystem Generalists: People with cross-domain perspective, able to evaluate proposals for strategic alignment and long-term impact.

- Mechanisms: Metrics-Enabled, Expert Stakeholder-Led Retro Funding (see footnote)

- Sub-Rounds: For the first iteration, this will take the form of one unified round, with thematic categories drawn from the Atlas’s verticals. If the approach proves successful, it could evolve into multiple, more targeted sub-rounds in future grant cycles, each focused on a specific opportunity.

Footnote: Metrics-Enabled, Expert Stakeholder-Led Retro Funding

Drawn from GG23 and other retro funding pilots (Optimism RF4, Filecoin RetroPGF 1), this model combines:

- Metrics-Enabled: Baseline and domain-specific metrics inform initial algorithmic allocations.

- Expert-Led: Domain specialists adjust allocations with context on data quality, security, or roadmap fit.

- Retro Funding: Rewards projects with proven impact from prior QF or ecosystem grants, reducing risk and incentivizing results-first delivery.

This model blends verifiable metrics with expert judgment to avoid “manufactured significance” and to ensure funding decisions reflect genuine, lasting impact, measured by whether open data tools shape governance, inform funding, and boost user trust.
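The Python sketch below shows one way the three components might fit together:

- Retro: it first filters to previously funded projects.
- Metrics-enabled: it splits the pool in proportion to a metric score.
- Expert-led: it lets experts scale individual allocations, then renormalizes to the pool.

The function names, the proportional split, and the 0.5 to 1.5 clamp on expert factors are illustrative assumptions. They are not the mechanism used in GG23, Optimism RF4, or Filecoin RetroPGF 1.

```python
from typing import Dict, Set


def initial_allocation(scores: Dict[str, float], pool: float) -> Dict[str, float]:
    """Metrics-enabled step: split the pool in proportion to each project's score."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("at least one eligible project needs a positive score")
    return {name: pool * s / total for name, s in scores.items()}


def expert_adjust(
    alloc: Dict[str, float],
    factors: Dict[str, float],
    pool: float,
    lo: float = 0.5,
    hi: float = 1.5,
) -> Dict[str, float]:
    """Expert-led step: scale each allocation by a clamped factor, then renormalize."""
    scaled = {
        name: amount * min(max(factors.get(name, 1.0), lo), hi)
        for name, amount in alloc.items()
    }
    total = sum(scaled.values())
    return {name: pool * amount / total for name, amount in scaled.items()}


def retro_allocate(
    scores: Dict[str, float],
    previously_funded: Set[str],
    factors: Dict[str, float],
    pool: float,
) -> Dict[str, float]:
    """Retro step first: only projects with a prior funding track record are eligible."""
    eligible = {name: s for name, s in scores.items() if name in previously_funded}
    return expert_adjust(initial_allocation(eligible, pool), factors, pool)
```

Clamping the expert factors is one way to keep judgment as an adjustment to the metrics rather than a replacement for them. A wider range would give experts more weight.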