UPDATE: We ratified results on snapshot on May 28th

Hey y’all, our GG20 OSS program round matching results are live here! We’ll have five days for review and feedback then, barring any major issues, will move to ratify on snapshot on May 22nd and payout on May 28th.

Special thanks to @Joel_m, @MathildaDV, @sejalrekhan, @M0nkeyFl0wer, @Jeremy, @meglister, @owocki and @deltajuliet for their eyes, comments, and feedback!

TL;DR

- GG20 saw a number of exciting developments including a $1M OSS matching pool, the election of a grants council, an upgrade to our core protocol, and a deepened partnership with our friends at Arbitrum.

- We implemented a two-pronged sybil resistance strategy. We used a pluralistic variant of QF and we used Passport’s model based detection system. We did not do any closed-source silencing of sybils/donors. Instead, we’re solely relying on our mechanism and Gitcoin Passport.

- Discussion will be open for five days before moving to snapshot on May 22nd.

GG20 Overview

GG20 Overview

Every round sees new developments. Some of the most exciting in GG20 included:

- Returning to our Open Source Software roots, doubling the number of rounds, projects, and matching funding available since GG19.

- Initiating Community Rounds Governance, electing a council to allocate matching funds for community-led QF rounds. Read the retro and see the proposal to extend the council’s term.

- Upgrading our core protocol to enable more permissionless building, going from allo v1 to allo v2, with GG20 being the first rounds to run on our new protocol!

- Providing the same sybil resistance tooling we use for our rounds to every community using Grants Stack by creating a cluster-matching calculator and integrating with Passport’s model based detection.

- Expanding our collections feature, enabling more people to add their own grant collections.

- Seeing the proliferation of side-apps and tooling, following our modular, unix philosophy. GG20 launches included GG Wrapped, Grants Scan, IDriss cross-chain donations on Twitter, gitcoindonordata.xyz, and a QF calculator for round managers.

- Consolidating all of our program rounds onto Arbitrum, partnering with Arbitrum and Thrive Coin to expand the matching pool and incentives for contributors and grantees.

Key Metrics:

Overall

- 11 Total Rounds

- $1.647M Matching Pool

- $633,431.29 Crowdfunded

- 35,109 Unique Donors

- 629 Projects

- More data here

OSS Program

- 4 Program Rounds

- $1.0M Matching Pool

- $484,487.00 Crowdfunded

- 28, 393 Unique Donors

- 327 Projects

Round and Results Calculation Details

Round and Results Calculation Details

Before GG20 began, we proposed a two-pronged sybil resistance strategy. To recap it briefly, we would continue to use COCM (Connection-Oriented Cluster Matching) as we had in GG19 and we would additionally introduce passport’s model-based detection system.

As explained in the post, and the paper which introduced this mechanism to the world, COCM is much less vulnerable to Sybil Attacks than ordinary QF because it reduces the matching of donors who look similar.

In addition, testing Passport’s model-based detection system (PMBDS) on GG19 donor data yielded greater sybil resistance than the stamp-based system without any of the user friction. When used together, we believe these two tools produce the most sybil-resistant results we’ve ever had.

We also continue the precedent we began in GG19 of not doing any black-box squelching of donor accounts. All of the code we used to calculate results is available on GitHub. Turning off squelching has allowed us to increase transparency of matching result calculations and reduced our time to payouts. In addition, it enabled us to scale the same sybil resistance methods we use to our partners through integrations with Grants Stack.

Recap of COCM: Connection-Oriented Cluster Matching

Quadratic Funding empowers a decentralized network to prioritize the public goods that need funding most. In doing so, it amplifies the voices of those with less money, ensuring bigger crowds receive more matching funds than larger wallets. However, it can be exploited by sybils or colluding groups who align their funding choices to unfairly influence matching fund distribution.

COCM is one of the tools we use to address this issue. It identifies projects with the most diverse bases of support and increases their matching funds. It offers a bridging bonus for projects that find common ground across different groups, rewarding cross-tribal support and broad reach. In this way, COCM values not only the number of voters supporting a project but also the diversity of tribes supporting it.

Recently, Joel upgraded the mechanism further with a Markov chain approach, which assesses the likelihood of a user’s connection to a project based on intermediate connections. In experiments, this provided more sybil resistance by a large margin.

As an unintended side effect, COCM also tends to distribute funding away from top projects and toward the long tail.

Passport’s Model Based Detection System

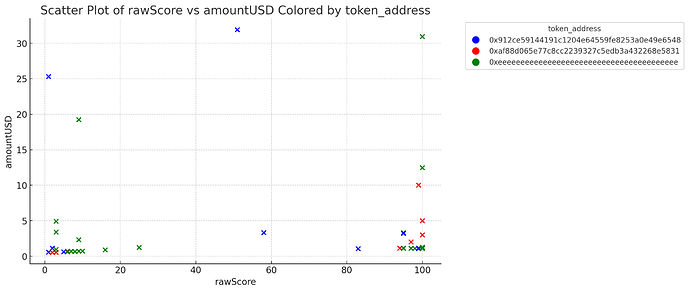

The Gitcoin Passport team has recently introduced a Model-Based Detection System that analyzes the on-chain history of addresses, comparing them to known human and sybil addresses. The model assigns each address a probability of being a genuine human user.

In GG20, this model detected 42.39% of participating wallets as very likely belonging to sybils, and these wallets were excluded from matching. The unmatched wallets accounted for 22.55% of all crowdfunded dollars.

While the model isn’t perfect and sometimes mistakes new users or those with few mainnet transactions for sybils, the team is continuously improving the dataset and expanding the model to L2s. Overall, it effectively reduced the impact of sybil accounts on matching results.

Project Spotlight

Project Spotlight

Here are the top five projects by total matching funding and per voter matching funding for each of our four rounds (gleaned from the overall matching results). The “total matching” list highlights those who received the most support overall, while the “matching per voter” list showcases those who received the most diverse support, benefiting from the use of COCM. The projects on this list are the ones with the most diverse bases of support, regardless of the size of the base.

Developer Tooling & Libraries - Top 5 by Total Matching

| Rank | Project | Matching Funds |

|---|---|---|

| 1 | ethers.js | $29,637.50 |

| 2 | OpenZeppelin Contracts | $23,281.82 |

| 3 | Blockscout Block Explorer - Decentralized, Open-Source, Transparent Block Explorer for All Chains | $17,741.54 |

| 4 | Swiss-Knife.xyz | $16,518.84 |

| 5 | Wagmi | $16,243.91 |

Developer Tooling & Libraries - Top 5 by Matching per Voter

| Rank | Project | Matching Per Voter (Avg) |

|---|---|---|

| 1 | Swiss-Knife.xyz | $83.43 |

| 2 | Viem | $64.28 |

| 3 | Ape Framework | $60.40 |

| 4 | ethui | $60.34 |

| 5 | Pentacle | $57.51 |

Hackathon Alumni - Top 5 by Total Matching

| Rank | Project | Matching Funds |

|---|---|---|

| 1 | NFC wallet | $10,000.00 |

| 2 | WalletX A Gasless Smart Wallet | $7,592.87 |

| 3 | Fluidpay | $7,228.21 |

| 4 | RejuvenateAI | $6,121.22 |

| 5 | Mosaic: Earn rewards for using your favorite web3 apps and protocols | $3,801.02 |

Hackathon Alumni - Top 5 by Matching per Voter

| Rank | Project | Matching Per Voter (Avg) |

|---|---|---|

| 1 | hashlists | $69.02 |

| 2 | Gnomish | $66.79 |

| 3 | DeStealth | $63.23 |

| 4 | Mosaic: Earn rewards for using your favorite web3 apps and protocols | $60.33 |

| 5 | Margari | $60.23 |

Web3 Infrastructure - Top 5 by Total Matching

| Rank | Project | Matching Funds |

|---|---|---|

| 1 | DefiLlama | $30,000.00 |

| 2 | L2BEAT | $25,206.67 |

| 3 | Umbra | $17,319.34 |

| 4 | Dappnode | $16,165.74 |

| 5 | Ethereum Attestation Service (EAS) | $16,022.61 |

Web3 Infrastructure - Top 5 by Matching per Voter

| Rank | Project | Matching Per Voter (Avg) |

|---|---|---|

| 1 | Ethereum on ARM | $14.65 |

| 2 | The Tor Project | $14.55 |

| 3 | Lighthouse by Sigma Prime | $14.41 |

| 4 | eth.limo | $10.69 |

| 5 | libp2p General | $10.63 |

| HM | Lodestar | $10.58 |

dApps & Apps - Top 5 by Total Matching

| Rank | Project | Matching Funds |

|---|---|---|

| 1 | JediSwap | $15,000.00 |

| 2 | IDriss - A more usable web3 for everyone | $15,000.00 |

| 3 | Hey.xyz (formerly Lenster) | $15,000.00 |

| 4 | Revoke.cash | $15,000.00 |

| 5 | Event Horizon | $12,098.09 |

dApps & Apps - Top 5 Matching per Voter

| Rank | Project | Matching Per Voter (Avg) |

|---|---|---|

| 1 | rotki | $27.93 |

| 2 | Karma GAP | $26.87 |

| 3 | Giveth | $25.94 |

| 4 | Funding the Commons | $22.78 |

| 5 | Glo Dollar | $22.06 |

Code of Conduct

As a reminder to all projects, quid pro quo is explicitly against our agreement. Providing an incentive or reward for individuals to donate to specific projects can affect your ability to participate in future rounds. If you see someone engaging in this type of behavior, please let us know.

Next Steps

We plan to distribute the matching by May 28th, after the results are ratified through governance. We are leaving five days of discussion on this post, and barring any major problems or issues found with these results, will proceed to a Snapshot vote that is open for five days.

It’s worth noting that GG19 pre-approved the matching fund to be paid out before results were posted. This is a strong precedent and, if the community agrees, we could pass a policy that matching payouts for all future rounds not require ratification.

We are also hosting an internal retro on May 20th and will publish further results and learnings. And as always, a detailed blog post will be published on the day that payouts are distributed.

We’re also always looking for direct feedback from the community on which improvements would make GG21 even better. Please don’t hesitate to let us know!