Round Description

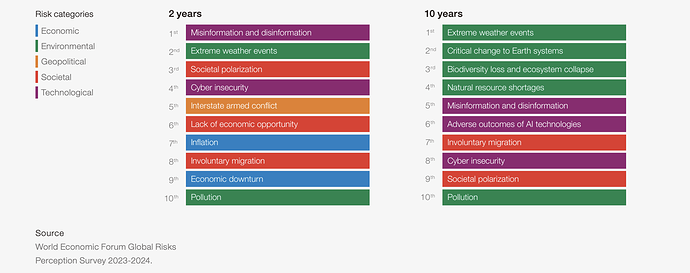

The Climate Coordination Network believes that Climate Action is the ultimate public good. Why? Because climate change increasingly impacts everyone worldwide. Our mission is to accelerate community-supported climate solutions on a global scale, catalyzing diverse forms of climate action to create a sustainable and equitable future for all.

The GG20 Climate Solutions Round provides strategic grant distribution to support global climate solutions projects.

Round Objectives

- To empower climate projects that are dedicated to reducing greenhouse gas emissions and serve as core infrastructure for Web3 climate solutions.

- To build community and accelerate coordination to help grow public goods for climate solutions worldwide.

- To test and improve impact metrics tools that enable climate projects to showcase their real-world impact for more effective and streamlined measurement and evaluation.

Target Audience and Participation

The Climate Solutions Round focuses on supporting early-stage projects within the following 9 categories of climate solutions:

- Renewable Energy

- Oracles & dMRV

- Carbon Accounting

- Climate Activism/Education

- Nature-Based Solutions

- Ocean-Based Solutions

- Climate Adaptation/Climate Resilience

- Supply Chain Solutions

- Built Environment/Transportation

Climate Solutions Round Implementation

The Climate Coordination Network (CCN) is a dedicated team of six people from around the world who have served on the Gitcoin Climate Advisory team for the past two years and have managed the decentralized climate round since GG19. The team is familiar with Gitcoin Grants round setup and management, and we divide the many tasks according to skill set and availability. To set up the GG20 Climate Solutions Round, we reviewed the eligibility criteria, updated the CCN Climate Solutions Portal, began communications and outreach, created the new Climate Solutions Metrics Garden, and updated grantee resources such as onboarding videos and review process documentation.

Our main challenges for GG20 involved our Climate Grantee review process. Most of our projects create real-world (IRL) impact, which is difficult to evaluate because it tends to be qualitative rather than quantitative. We strive to improve this process each round, and for GG20 we used Checker (a new tool built by Gitcoin) to help with the lengthy process of reviewing 173 diverse grantee applications. Unlike in OSS rounds, Checker’s AI features did not help much with reviewing climate projects. The AI model could be trained to provide useful feedback in the future, but for this round it was not effective. In past rounds, we used spreadsheets for the evaluation process; Checker provided a nice interface that gathered the information needed to complete our reviews. We hope to see something like Checker integrated into Grants Stack, as approvals and rejections are still manual. We also experienced technical issues with Grants Stack that prevented us from exporting grantee contact information, which disrupted communication with our grantees for most of the round.

Going forward, we are discussing a new process that will streamline, simplify, and clarify climate reviews by requiring dMRV for certain types of projects, as well as impact reporting through tools such as Hypercerts and KarmaGAP. We will require all of our grantees to use KarmaGAP to list 2-3 milestones they will accomplish with the funding received during GG20. This requirement will enable grantees to showcase their impact and significantly reduce the time we spend on reviews in future rounds.

What sets the Climate Solutions Round apart is that we cultivate a supportive community, which leads to ongoing, productive relationships and collaborations. While reviewing grant proposals from projects that have participated in multiple rounds, we found clear examples of grantees helping each other and working together to accomplish goals and address challenges. For example, as we transition to clearer requirements for impact metrics, many grantees are working with Silvi Protocol, a long-trusted dMRV project with an impressive Web3 tech stack. Another example of climate grantees supporting each other is Helpers Social Development Foundation, a community-led NGO in Nigeria that is developing solar-powered community centers in partnership with The Solar Foundation and is also working to pilot an IoT smart metering device built by Switch Electric (also a grantee based in Nigeria).

Outcomes and Statistics

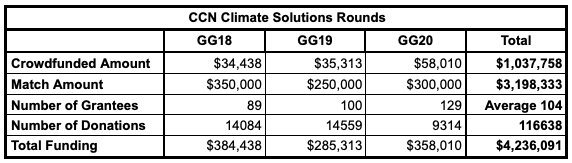

Report card:

- 129 Climate Solutions Grantees

- Total crowdfunding: $58k

- 9,314 contributions

- 2,262 unique donors

- Average contribution: $4.08

A summary of the past three rounds shows growth from round to round.

Round Matching Results

After the round, we evaluated the matching estimates using several methods: COCM (connection-oriented cluster matching), standard QF (quadratic funding), and Pairwise. We then had a long internal debate about the best way to calculate the results. While most, if not all, other rounds followed Gitcoin’s lead and used COCM, we found it was not a good fit for our community. COCM looks at how donors are interconnected through their donation behavior: it rewards projects whose diverse donors contribute to a variety of other projects and penalizes projects whose donors contributed to only one project. In many rounds, COCM produces a fairer outcome and helps with sybil defense.

The issue is that the climate round is a closely connected community: most donors contribute to many projects in the round, which we love to see. We are proud of the coordination within the community, but the round results were flattened when we applied the COCM calculations. The projects that received the most support and signal from the community would not have received a fair share of the matching pool; in some cases, they would have received 60-70% less than under the QF calculations.

If we had moved forward with COCM, we would have disincentivized some of our top grantees, who had worked so hard on marketing during the round. For this reason, we decided to use the standard QF calculations.

The round results can be found here.

Impact Assessment

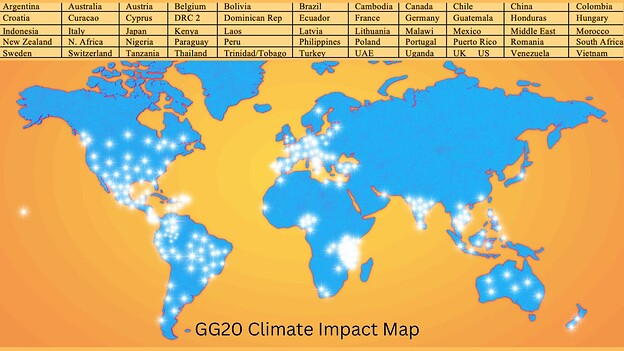

In sum, the GG20 CCN Climate Solutions Round supported 129 climate solutions worldwide (see the Climate Impact Map), reducing greenhouse gas emissions, building core infrastructure, and, perhaps most importantly, facilitating cooperation and coordination to accelerate climate action. This round, we introduced the Climate Solutions Metrics Garden and encouraged Climate Grantees to start using metrics tools such as KarmaGAP and Hypercerts to showcase their impact, setting the stage for impact tools that will be required for participation in future rounds.

Impact Map for GG20

Participant Feedback

“The @climate_program is critical. We cannot overstate its importance, through the bear & the bull, this program has provided necessary resources to projects leveraging Web3 for solving real world, real hard problems” — Irthu, Atlantis

“Silvi would not be here without the support of Gitcoin and the CCN Climate Solutions Rounds. The climate rounds help support our mission to build tools that empower community driven reforestation around the world.” — Djimo Serodio, founder, Silvi Protocol

“Yes so grateful! Thanks CCN & Gitcoin. This funding is crucial for our ongoing operations and growth.” – Isaiah, co-founder Litter Token

Lessons Learned and Areas for Improvement

- Checker’s format and user interface helped with reviewing grants; however, it would be extremely helpful to be able to use its AI features in the next round and to have Checker integrated into Manager.

- We need to improve and streamline our review process and are currently working on creating the CCN Project Grader to facilitate this goal.

- We have outlined and tested a new process for dispute resolution and will be refining this process for GG21.

- We’ll work on updating the Eligibility Criteria of the Climate Round based on what we learned this round.

A big thank you goes out to Momus Collective, Gitcoin, Arbitrum, ThankARB, and Celo Public Goods for supporting our matching pool!

A Note from the Celo Public Goods team

The preliminary results are in for the Celo Climate bonus round! You can check them out here.

As the first step in the payout process, we are asking accepted projects to visit the new Celo Public Goods Community Grants Karma GAP dashboard, review the Celo-related claims made in their applications, and add specific Milestones or Updates related to their project’s development with Celo. For an excellent example, take a look at Kokonut Network’s page. Projects that effectively provide these updates may also increase their eligibility and reward potential for upcoming Celo rounds!

A heartfelt thank you to CCN and the entire Climate and ReFi community for your continuous support and dedication. We look forward to seeing the incredible progress you will make with the support of these grants and are excited for the opportunities ahead.

Closing Thoughts

CCN greatly appreciated the opportunity to participate as a Gitcoin Community Round for GG20 and we look forward to mutually beneficial partnerships and collaborations in the future.