(Thanks to @ccerv1 for reviewing this post and providing feedback)

Assembly Theory x Onchain Capital Allocation

A powerful approach to Allo design space exploration

TL;DR

- Assembly Theory explains how complexity (and innovation) emerges by combining simpler components through incremental steps.

- Systematic Exploration of Onchain Capital Allocation Design Space: Treat web3 funding infra as a modular system. Map its components, test combinations, and systematically assemble powerful new solutions.

- Networked Exploration for Allo Community: Foster a pluralistic, purposeful, network-driven approach to exploring the capital allocation design space, leveraging decentralized collaboration and iterative experimentation to uncover high-potential assemblies of funding mechanisms.

Introduction

What if the key to unlocking innovation lies not in creating something entirely new, but in how we piece together what already exists? Assembly Theory provides a powerful lens to understand how complexity arises—whether in nature, technology, or ideas—by focusing on how systems are built from smaller, reusable components over time.

This perspective is especially relevant to the modularity of Web3 (“money legos”), the rapidly evolving tools for funding public goods, and even the creative freedom of Minecraft. In this post, we’ll explore how Assembly Theory can illuminate new possibilities for Onchain Capital Allocation, and inspire the Allo community to systematically map and expand this emerging design space.

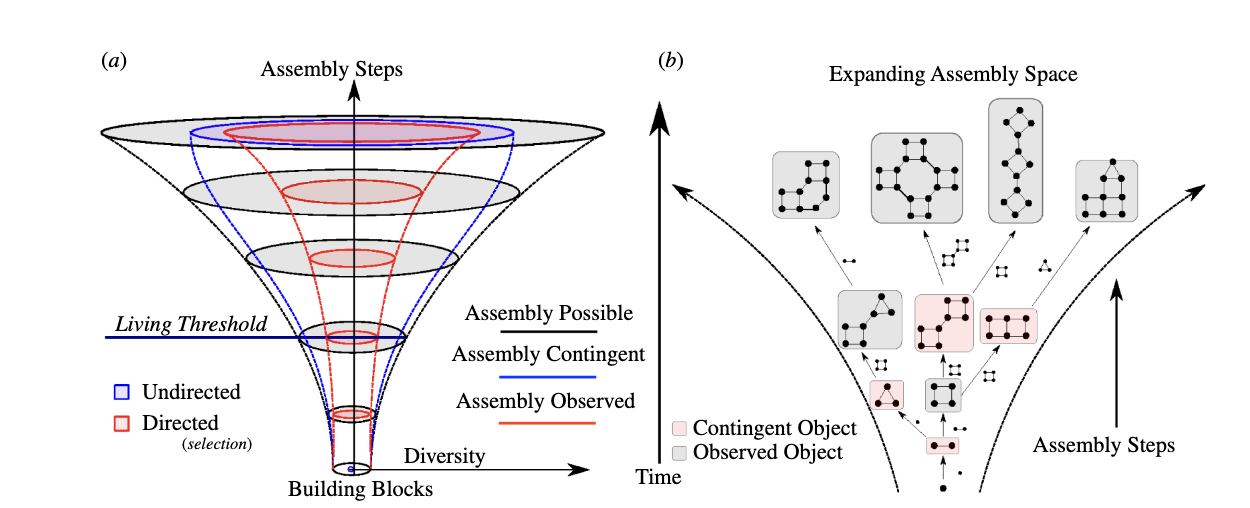

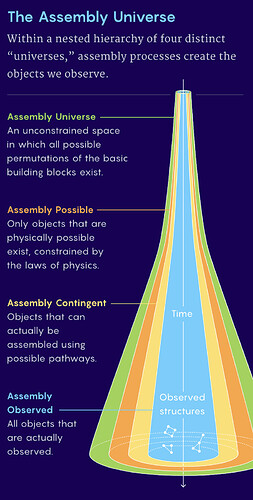

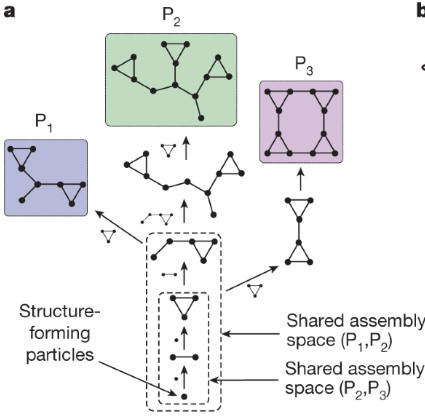

Assembly Theory: The Building Blocks of Complexity

Assembly theory posits that complex systems emerge from simpler components combined through a series of steps. This framework can describe natural phenomena (like the evolution of biological organisms), engineered systems (like software or blockchain protocols), and even conceptual systems like public goods funding mechanisms or onchain capital allocation systems.

Assembly theory echoes Gall’s Law, which states that successful complex systems evolve from simple, functional ones, while complex systems designed from scratch often fail. Both emphasize starting with simplicity and iteratively building complexity to ensure adaptability and success.

In Assembly Theory, composability is a superpower because it allows complex systems to emerge from simpler building blocks, enabling the creation of highly sophisticated structures through iterative combination and reuse. By leveraging composability, components can be assembled, disassembled, and reassembled in novel ways, dramatically increasing the diversity and adaptability of outcomes without requiring a linear increase in complexity. This principle mirrors evolutionary processes in nature and underpins innovation in areas like software development, modular design, and synthetic biology, where small, interoperable units can yield vast creative potential.
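To make the reuse point concrete, here is a minimal Python sketch under a simplifying assumption: the object being built is a chain of identical basic blocks, and each step joins two objects. Without reuse, each step attaches one more block. With reuse, each step can join the current object to a copy of itself. The function names are illustrative only and do not come from any assembly theory library.

```python
def joins_without_reuse(copies: int) -> int:
    """Joins needed when each step attaches one more basic block."""
    if copies < 1:
        raise ValueError("copies must be positive")
    return copies - 1


def joins_with_reuse(copies: int) -> int:
    """Joins needed when each step joins the current object to a copy of itself.

    Assumes `copies` is a power of two, so every step exactly doubles the object.
    """
    if copies < 1 or copies & (copies - 1):
        raise ValueError("copies must be a power of two")
    return copies.bit_length() - 1


for copies in (2, 8, 64, 1024):
    print(copies, joins_without_reuse(copies), joins_with_reuse(copies))
```

For 1,024 blocks the sketch gives 1,023 joins without reuse versus 10 with reuse, which is one way to read the claim that composability grows outcomes without a matching growth in steps.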

Purposeful Evolution

One key to assembly theory is understanding that complexity is not random; it emerges through purposeful or guided processes, constrained by physical, logical, or social rules. It’s a perspective that emphasizes the power of incremental, modular growth.

Assembly theory places guided and purposeful processes at the heart of how complexity emerges. These processes are not random but intentional, following specific pathways that are shaped by rules, goals, or constraints within a system. Whether driven by natural selection, human decision-making, or computational algorithms, guided processes channel growth and organization toward functional outcomes, ensuring that complexity develops in meaningful and efficient ways.

Purposeful processes work by leveraging feedback, iteration, and adaptation. In biological systems, for example, evolution is a guided process where mutations are filtered through the lens of survival and reproduction. Similarly, in human systems, innovation often progresses through deliberate experimentation, learning, and refinement, where each iteration builds on past successes and failures. These processes are purposeful because they are directed toward achieving specific outcomes, whether optimizing fitness in nature or creating useful technologies in society.

A key feature of guided processes is their reliance on constraints as a form of guidance. Constraints—whether physical, logical, or social—act like a blueprint, channeling the process of assembly into pathways that maximize efficiency and minimize waste. For instance, in technological development, design constraints shape how systems evolve, ensuring that each component serves a clear purpose within the larger structure. This guidance prevents the system from becoming overly complex or unstable, allowing it to scale in a modular, controlled way.

Ultimately, guided and purposeful processes are what differentiate complexity from randomness. They imbue systems with direction and intent, ensuring that their growth is not only structured but also meaningful. By understanding how these processes operate, we can better appreciate the intricate systems around us and design more effective strategies for fostering complexity in fields ranging from biology to engineering and beyond.

What do advancements look like in assembly theory?

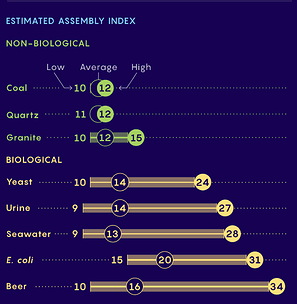

The complexity index in assembly theory (usually called the assembly index in the research literature) is a measure of the minimum number of sequential joining steps required to assemble a specific object or system, where parts built in earlier steps can be reused. It reflects the object’s history of guided processes. Higher values indicate more intricate and layered assembly pathways.

This index helps quantify complexity by capturing how much effort or design is encoded in the object’s structure, distinguishing between random arrangements and those formed through purposeful, incremental construction.
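For toy objects the index can be computed exactly. A minimal sketch, assuming the common string formulation: the basic blocks are the target’s individual characters, each step concatenates two objects already available, and anything built earlier can be reused. The breadth-first search below is a brute-force illustration that only scales to short strings; `assembly_index` is a hypothetical helper name.

```python
from itertools import product


def assembly_index(target: str) -> int:
    """Minimum number of joins needed to build `target` from its characters.

    Assumes the string formulation: basic blocks are single characters, each
    join concatenates two available objects, and built objects are reusable.
    Brute-force breadth-first search, so only practical for short strings.
    """
    if len(target) <= 1:
        return 0
    start = frozenset(target)  # the distinct characters are the basic blocks
    frontier = {start}
    seen = {start}
    steps = 0
    while frontier:
        steps += 1
        next_frontier = set()
        for pool in frontier:
            for a, b in product(pool, repeat=2):
                joined = a + b
                # Objects that are not substrings of the target can never be
                # part of a shortest pathway, so they are pruned.
                if joined in pool or joined not in target:
                    continue
                if joined == target:
                    return steps
                new_pool = pool | {joined}
                if new_pool not in seen:
                    seen.add(new_pool)
                    next_frontier.add(new_pool)
        frontier = next_frontier
    raise ValueError("target could not be assembled")


print(assembly_index("ABABABAB"))  # 3
print(assembly_index("ABC"))  # 2
```

`ABABABAB` needs 3 joins (A+B, AB+AB, ABAB+ABAB) instead of the 7 it would take to add one character at a time, because the search is allowed to reuse what it has already built.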

The complexity index can be used as a proxy for “advancedness” in evolution. The more complex an object is, the further its evolution has progressed from simpler elements. This suggests a longer and more intricate evolutionary history, where functional traits or features have been built upon over time.

But “advanced” does not necessarily imply superiority or fitness in an absolute sense. In evolutionary terms, advancedness simply reflects the cumulative processes that have contributed to the object’s current state. For instance, a complex molecule, organism, or artifact may have a high complexity index due to a rich assembly history, but its fitness or success depends on how well it functions within its specific environment or context. Thus, while the complexity index is a useful tool for gauging evolutionary progression, it should be interpreted as a marker of historical assembly depth rather than a direct measure of fitness or utility.

While complexity is not inherently a measure of fitness or utility, it can sometimes align with them. For example, in biology, the complexity of the human brain enhances adaptability and problem-solving, directly contributing to survival and reproduction. Similarly, in technology, complex devices like smartphones integrate diverse functions, making their complexity a source of utility.

However, complexity can also reduce fitness or utility. Bacteria, though simple, often outcompete complex organisms in extreme or changing environments due to their efficiency and adaptability. Overly complex systems, whether in technology or biology, can become inefficient or vulnerable. Thus, complexity’s relationship to fitness and utility depends on context.

Web3 and the “Money Legos” Analogy

Web3 exemplifies modularity with its “money legos” concept. Protocols like Uniswap, Aave, and MakerDAO operate as composable parts—tools that developers can assemble in novel ways to create new financial products, decentralized applications, and governance systems. This composability has fueled innovation in decentralized finance (DeFi), allowing for rapid iteration and experimentation.

Much like how Lego bricks encourage endless creations, Web3 protocols form a design space ripe for exploration. Developers can combine these foundational tools to craft systems tailored to specific use cases, from yield aggregators to quadratic funding platforms.

In my most recent Greenpill podcast episode with Vitalik on web3 public goods funding, he talks a bit about how QF, Retro Funding, Futarchy, etc., are just legos that can be assembled into different configurations. The podcast comes out 12/12 here.

Onchain Capital Allocation as a Design Space

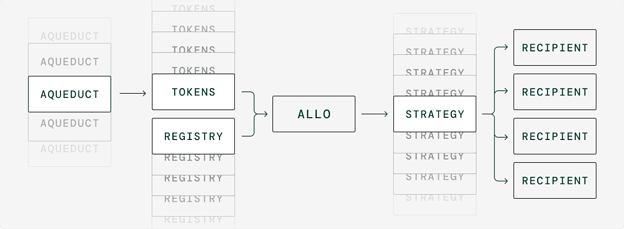

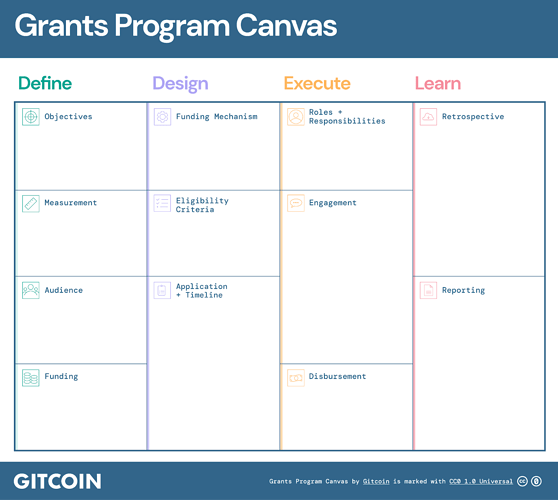

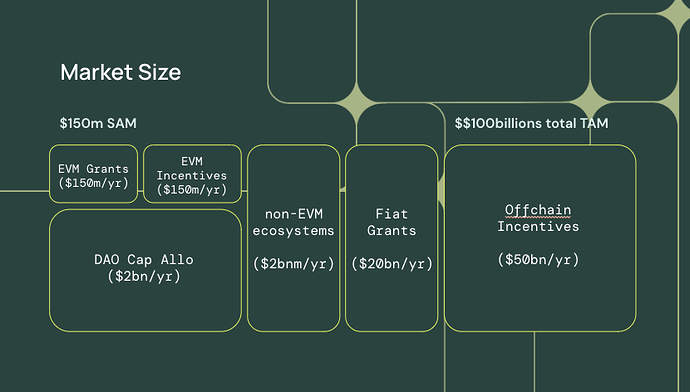

Onchain Capital Allocation (including public goods funding in Web3), whether through Gitcoin Grants, retroactive public goods funding, or Allo protocol, represents another rich design space. Each tool or mechanism is a component in a larger assembly, capable of being recombined and optimized for different contexts.

Assembly theory helps frame this design space as a process of incremental discovery, which happens when you combine smaller composable parts with each other:

- What happens when you combine module A with module B? Mechanism X with Mechanism Y?

- What happens when you mix quadratic voting with staking incentives?

- How might retroactive public goods funding integrate with DAO-based decision-making?
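As a hedged illustration of combining mechanism X with mechanism Y, the sketch below treats each funding rule as a module that maps an allocation to an allocation. It uses the standard quadratic funding rule (a project’s ideal match is the square of the sum of the square roots of its contributions, minus the contributions themselves), scaled pro rata to a fixed matching pool as a simplifying assumption. The `cap_module` and `drop_below` modules, the project names, and the contribution amounts are hypothetical.

```python
from functools import reduce
from math import sqrt
from typing import Callable, Dict, List

Allocation = Dict[str, float]
Module = Callable[[Allocation], Allocation]


def quadratic_match(contributions: Dict[str, List[float]], pool: float) -> Allocation:
    """Quadratic funding: ideal match = (sum of sqrt(c))^2 - sum(c), scaled to the pool."""
    ideal = {
        # max() guards against tiny negative values from floating-point rounding.
        project: max(0.0, sum(sqrt(c) for c in cs) ** 2 - sum(cs))
        for project, cs in contributions.items()
    }
    total = sum(ideal.values())
    if total == 0:
        return {project: 0.0 for project in ideal}
    # Pro-rata scaling so the matches spend exactly the pool (a simplification).
    return {project: pool * value / total for project, value in ideal.items()}


def cap_module(max_share: float, pool: float) -> Module:
    """Hypothetical module: no project receives more than max_share of the pool."""
    limit = max_share * pool
    return lambda alloc: {p: min(v, limit) for p, v in alloc.items()}


def drop_below(threshold: float) -> Module:
    """Hypothetical module: matches smaller than threshold are set to zero."""
    return lambda alloc: {p: (v if v >= threshold else 0.0) for p, v in alloc.items()}


def assemble(*modules: Module) -> Module:
    """Chain modules left to right into a single allocation step."""
    return lambda alloc: reduce(lambda acc, module: module(acc), modules, alloc)


# Hypothetical contributions per project.
contributions = {"alpha": [1, 1, 1, 1], "beta": [4], "gamma": [1, 4]}
matches = quadratic_match(contributions, pool=100.0)
print(matches)  # {'alpha': 75.0, 'beta': 0.0, 'gamma': 25.0}
print(assemble(cap_module(0.5, 100.0), drop_below(60.0))(matches))
print(assemble(drop_below(60.0), cap_module(0.5, 100.0))(matches))
```

With these inputs, QF alone yields 75 / 0 / 25 (the single-donor project gets no match). Applying the cap before the threshold zeroes out `alpha`, while applying the threshold first leaves it at 50: the same two blocks produce different programs depending on the order in which they are assembled.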

By viewing Onchain Capital Allocation widgets as a dynamic, assemble-able system, we open up opportunities for experimentation and evolution, much like how biological systems adapt to their environments.

One way to start might be to map out all of the different types of components in the possibility space, as we’ve done visually below.

Another way to start might be to map out all of the permutations of program design possibilities, as we’ve done visually below.
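One minimal way to map program permutations in code is a Cartesian product over design dimensions. The dimensions and options below are hypothetical placeholders drawn loosely from mechanisms named in this post, not an official Allo taxonomy; the point is that the number of candidate programs is the product of the option counts.

```python
from itertools import product
from math import prod
from typing import Dict, Iterator, List

# Hypothetical design dimensions; a real map would come from the community's
# own inventory of Allo components.
DESIGN_SPACE: Dict[str, List[str]] = {
    "eligibility": ["open", "curated"],
    "mechanism": ["quadratic funding", "retro funding", "futarchy"],
    "decision-makers": ["token holders", "DAO council", "expert panel"],
    "payout": ["lump sum", "streaming"],
}


def enumerate_programs(space: Dict[str, List[str]]) -> Iterator[Dict[str, str]]:
    """Yield every program design as one choice per dimension."""
    names = list(space)
    for combo in product(*(space[name] for name in names)):
        yield dict(zip(names, combo))


programs = list(enumerate_programs(DESIGN_SPACE))
print(prod(len(options) for options in DESIGN_SPACE.values()))  # 36
print(len(programs))  # 36
print(programs[0])
```

Even four small dimensions give 36 candidate programs, and each new option multiplies that count rather than adding to it, which is why the order and strategy of exploration matter.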

From there, we can start to reason about the most powerful pieces to combine with each other. We can assemble them in any order we’d like.

A naive approach to exploring this design space might be a single team that combines components by brute force, one by one, and empirically tests whether each resulting circuit is useful.

Perhaps this team could iterate from there. To become more effective, it could iterate faster, apply a priori thinking, partner with proven collaborators, follow cutting-edge research, or evolve other strategies. Each strategy effectively augments the team’s design function, making it more effective. A team of live players is likely to find new, innovative ways to explore the design space, and those gains compound the longer the team stays alive.
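The naive single-team approach can be sketched as an exhaustive loop over pairs of components. This is a minimal illustration, assuming a hypothetical `evaluate` callback that stands in for an empirical test (a pilot round, a simulation); the numbers in `toy_scores` are placeholders for the example, not measurements.

```python
from itertools import combinations
from math import comb
from typing import Callable, List, Sequence, Tuple

Pair = Tuple[str, str]


def brute_force_pairs(
    modules: Sequence[str],
    evaluate: Callable[[Pair], float],
    keep: int = 3,
) -> List[Tuple[float, Pair]]:
    """Test every pair of modules once and return the `keep` best-scoring pairs."""
    results = [(evaluate(pair), pair) for pair in combinations(modules, 2)]
    results.sort(key=lambda item: item[0], reverse=True)
    return results[:keep]


modules = ["quadratic voting", "staking incentives", "retro funding", "DAO decision-making"]

# Hypothetical placeholder scores standing in for real experiments; unlisted pairs score 0.
toy_scores = {
    frozenset({"quadratic voting", "staking incentives"}): 0.7,
    frozenset({"retro funding", "DAO decision-making"}): 0.9,
}


def toy_evaluate(pair: Pair) -> float:
    return toy_scores.get(frozenset(pair), 0.0)


print(comb(len(modules), 2))  # 6 experiments for 4 modules
print(brute_force_pairs(modules, toy_evaluate, keep=2))
```

The cost is the catch: with n components there are n(n-1)/2 pairs to test, so 50 components already mean 1,225 experiments before considering triples. Faster iteration, a priori pruning, and partnerships are all ways of shortening that list.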

Recall that a key to assembly theory is understanding that complexity is not random; it emerges through purposeful or guided processes. By explicitly examining the processes through which we create this complexity, we can explore the design space more purposefully.

An even more enlightened approach might ask how we can explore this design space in a systematic, pluralistic way, guided by network-based processing across multiple agents, much as a decentralized organism like a slime mold explores the space around it.
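The networked alternative can be sketched as many agents sharing one map of the design space. The toy below is loosely modeled on ant-colony-style search rather than on any specific slime mold model: each agent samples an assembly, options used in good assemblies get reinforced on a shared trail, and trails slowly decay so the network keeps exploring. The design space, the hidden `TARGET`, and `toy_score` are hypothetical stand-ins for real program outcomes.

```python
import random
from typing import Callable, List, Tuple

Config = Tuple[str, ...]


def networked_search(
    space: List[List[str]],
    score: Callable[[Config], float],
    agents: int = 5,
    rounds: int = 30,
    seed: int = 0,
) -> Tuple[Config, float]:
    """Toy shared-trail search: agents sample configs and reinforce good options."""
    rng = random.Random(seed)
    # One shared weight per option per dimension; every agent reads and writes it.
    weights = [[1.0] * len(options) for options in space]
    best: Config = tuple(options[0] for options in space)
    best_score = score(best)
    for _ in range(rounds):
        for _ in range(agents):
            # Sample one option per dimension, favoring heavily reinforced options.
            picks = [rng.choices(range(len(opts)), weights=w)[0] for opts, w in zip(space, weights)]
            config = tuple(opts[i] for opts, i in zip(space, picks))
            value = score(config)
            # Reinforce the options this agent used, in proportion to the score.
            for dim, i in enumerate(picks):
                weights[dim][i] += max(value, 0.0)
            if value > best_score:
                best, best_score = config, value
        # Evaporation: weights decay toward a small floor so exploration never stops.
        weights = [[max(0.9 * w, 0.01) for w in row] for row in weights]
    return best, best_score


# Hypothetical design space and a hidden "good" assembly used only for scoring.
SPACE = [
    ["open", "curated"],
    ["quadratic funding", "retro funding", "futarchy"],
    ["token holders", "DAO council", "expert panel"],
]
TARGET = ("curated", "retro funding", "expert panel")


def toy_score(config: Config) -> float:
    return float(sum(a == b for a, b in zip(config, TARGET)))


best, best_score = networked_search(SPACE, toy_score)
print(best, best_score)
```

Because every agent reads and writes the same weights, a promising combination found by one agent immediately biases what the others try next, which is the network-driven dynamic described above rather than one team working through a list.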

I think there is a powerful but counterintuitive example of an ecosystem that is doing this.

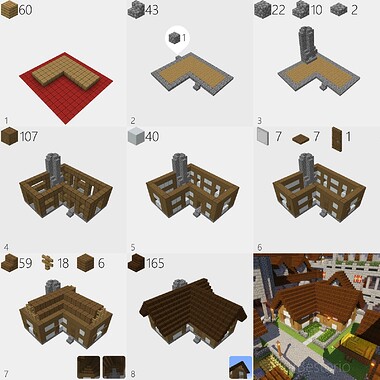

Minecraft: Mining the Design Space

To further illustrate the power of assembly, consider Minecraft. The game thrives on its simplicity, composability, and modularity, providing players with basic blocks and rules. Yet, these elements allow for an infinite range of creations, from castles to computers.

Minecraft succeeds because it makes the design space accessible, fun, and social. Players explore possibilities not out of obligation but because the game invites creativity in an engaging environment. The game demonstrates that when you create a platform where exploration is encouraged, participants will mine the design space, discovering possibilities that even the creators of the game couldn’t have imagined.

Minecraft is renowned for its boundless creativity, enabling players to build an astonishing array of structures and experiences. From simple homes and sprawling cities to intricately designed replicas of real-world landmarks, the game fosters architectural diversity on a global scale. Beyond physical structures, players craft immersive role-playing adventures, functioning calculators, and even working computers using Redstone mechanics. Minecraft’s versatility extends to educational projects, art installations, and collaborative efforts that blur the lines between gaming and creativity, making it a unique platform for self-expression and innovation.

If Minecraft’s modularity unlocks infinite creative potential, what lessons can we apply to Onchain Capital Allocation systems?

Lessons for Onchain Capital Allocation

What if we (1) recognized the power of assembly theory in Onchain Capital Allocation and (2) applied Minecraft’s ethos to its design space? By drawing from assembly theory, we can design tools and platforms that systematically invite Allo/Gitcoin’s thousands of followers to explore this space. Here’s how:

- Create Accessible Building Blocks: Simplify the process of combining mechanisms. Onchain Capital Allocation tools should offer intuitive, modular components: just as it’s easy to place Minecraft blocks, it should be easy to assemble Allo blocks.

- Foster a Fun, Profitable, and Social Environment: Encourage collaboration, gamify experimentation, and celebrate contributions, much as hackathons, community grant programs, and accelerators do.

- Encourage Play, Discovery, & Iterative Improvement: Provide environments where ideas can be tested, recombined, and improved, with clear feedback loops to guide development.

- Highlight Assembly Milestones: Share examples of successful assemblies, showcasing how others have used the building blocks in creative ways.

We can systematically explore the design space of Onchain Capital Allocation, just as players explore Minecraft or developers tinker with DeFi protocols. This perspective doesn’t just encourage innovation; it creates the conditions for a Cambrian explosion of possibilities in Onchain Capital Allocation.

Conclusion: Assembling the Future of Onchain Capital Allocation

Assembly theory invites us to see systems not as static entities but as dynamic assemblages of parts that evolve over time. Web3’s money legos, Onchain Capital Allocation mechanisms, and even playful platforms like Minecraft exemplify the power of modularity and exploration.

By applying these principles, the Allo/Gitcoin community has the opportunity to create, lead, and participate in an evolutionary exploration of the Onchain Capital Allocation design space. By embracing modularity, collaboration, and iteration, we can uncover new ways to fund, sustain, and grow the Onchain Capital Allocation systems that underpin our collective future.

Let’s assemble something extraordinary.