One thing I find interesting about web3 is how it’s changing the way work gets done. In closed source organizations, we’d keep a product vision under wraps until the day the Minimum Viable Product (MVP) launched. That is not so in web3, where Open Source is the norm and we work in public. We are surrounded by tens or hundreds of contributors in Discord who work on a DAO’s mission (as opposed to for a company), which blurs the line between internal and external.

I want to talk about an evolution I’m observing in myself and in others as a result of this change in working norms.

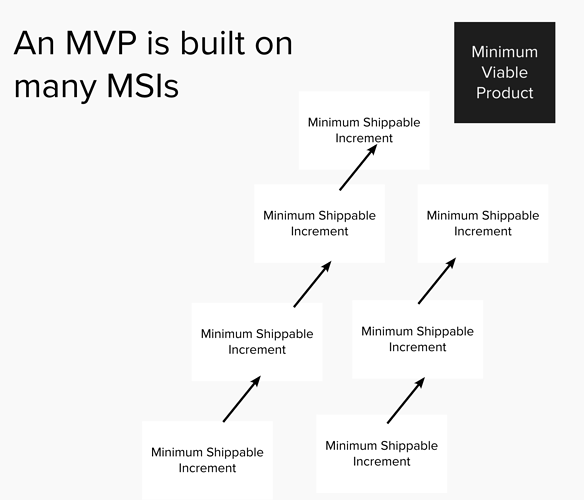

As a member of a web3 team or a DAO contributor, you may be working on a team that is aiming to ship a Minimum Viable Product of some external-facing deliverable.

When you’re working in public, the guts of the work you do to ship that MVP are in public already (or at least semi-public, depending upon what your DAO’s communication structures look like).

So what does the work look like that you ship to your coworkers or followers (the people who follow your “work in public” career) in the lead-up to the Minimum Viable Product for an external release?

I think that it’s a Minimum Shippable Increment (MSI). I have been thinking about the idea of MSIs a lot recently, because I think that Minimum Shippable Increments are what you get when you cross Minimum Viable Product x Working in Public.

An MSI has three criteria:

- minimal - scoped down as far as possible

- shippable - comprehensible to stakeholders (the people following your work in public)

- increment - adds value toward the MVP that will ship publicly

Why do MSIs matter?

All of the work that contributors do in a DAO is the accumulation of their MSIs.

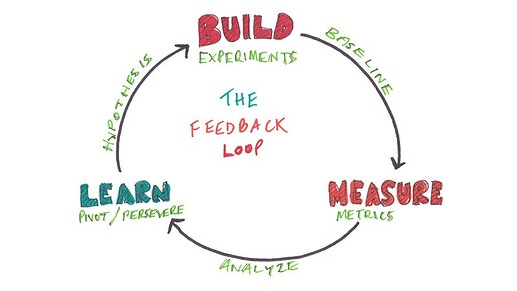

I think that the DAOs that will win are the ones that successfully design communication structures that allow contributors to (1) find/define their next MSI, (2) execute their MSI, (3) learn from their MSI, and (4) repeat rapidly and effectively.

The MSI Loop

I think we should aggressively lower the bar for starting our next MSIs from “do we all agree?” to “safe to try”. Permissionless experimentation allows bottom-up innovation to be a part of our culture. It’s how we’ll win.

I also think we should be comfortable with experiments failing. A Minimum Shippable Increment that fails to confirm its hypothesis is not an embarrassment - it is an opportunity to learn (provided no harm is caused, of course).

I think that each person should understand why they’re working on their next MSI, and should understand the learnings that come from it. I’ve seen a lot of projects go awry because the person doing the work didn’t understand the “why” and so couldn’t make tradeoffs, or was not connected to the learnings that came out of it.

I think that a relentless focus on MSIs, paired with a sense of urgency and a bias towards action, is an extremely powerful combination. It lets DAO contributors rapidly learn, adapt, and create outcomes, which is a competitive advantage in a rapidly evolving industry.