Summary

This GCP proposes to support a machine learning competition to predict the funding received by projects in GG23. The objective is to understand which metrics and models are most likely to produce an allocation similar to that of quadratic funding, a mechanism driven by human judgment.

For this, we are requesting a total of 20,000 GTC (~$11k as of 29th January), half of which is given as prizes to winning contestants while the other half covers pay for judges and operating expenses.

Abstract

Deep Funding has a market of AI models competing to make predictions that align as closely as possible with human judgment. Plugging in Deep Funding for GG23 will serve as an experiment in replicating human judgment in QF with AI, create an information surfacing tool for voters, and more generally involve the machine learning community in Gitcoin rounds.

There are 3 primary components:

- A list of all competing projects in GG23

- A competition where contestants provide weights to each project indicating the relative funding they will receive

- A leaderboard, finalized after funding amounts are announced, showing which ML models best predicted funding to projects in the round

Motivation

As Gitcoin moves to becoming multi-mechanism, especially with retrofunding and futarchy, its ecosystem needs a capability boost in its understanding of metrics and its assessment of predictions. This competition seeks to provide that by asking the top performing model submitters to make public the metrics considered in their predictions of relative funding between projects in GG23.

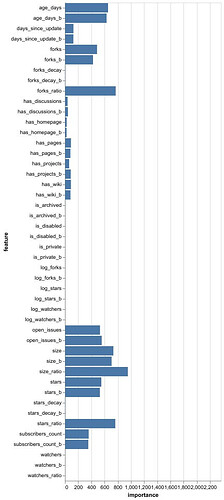

Previously, Deep Funding demonstrated the impact of these contests by asking models to predict funding received by projects in Gitcoin, Optimism, Open Collective and Octant rounds from 2019 to 2024. You can see the submissions in the contest here; to give one example, the current top rated model by @davidgasquez used the following weights to predict how much funding a project would receive

By expanding Deep Funding to GG23, we can gauge how well AI models predict not just past funding but also upcoming round results. If the gap between model predictions and the eventual funding allocation is narrow, we can eventually use these models to determine how much funding a project should receive in a round without the project even having to take part in it.

This collaboration will result in:

- Submissions on Gitcoin’s forum by machine learning experts on which parameters fed into their models could best predict the results of a GG23 round before it concludes

- An indicator to voters of which projects are predicted to perform well in a GG23 round

- Concrete data on the gap between the winning models’ predictions of funding received by each project in the round and the actual amount it ends up receiving in GG23

Specifications

- Creation of the GG23 predictive funding contest on cryptopond.xyz

  - Receive the Project List: A list of all participating projects will be uploaded to cryptopond.

  - Submit Your Predictions: For each project, forecast the fraction of the total funding it will receive. All predicted weights must sum to 1.

  - Compare to Reality: When the round completes and actual payouts are finalized, we’ll calculate the actual weight for each project. For example, if Project A receives $10,000 out of a $100,000 round, its weight is 0.10.

  - Scoring: We’ll use RMSE (root mean squared error) to evaluate how close each submission is to the real, final allocation. The model with the lowest RMSE wins.
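The comparison and scoring steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scoring code cryptopond will run: the project names, payout figures, and the helper names `to_weights` and `rmse` are hypothetical, and we assume the error is averaged over all projects in the round with equal weight.

```python
import math


def to_weights(amounts):
    """Convert raw funding amounts into fractions of the round total."""
    total = sum(amounts.values())
    if total <= 0:
        raise ValueError("total funding must be positive")
    return {project: amount / total for project, amount in amounts.items()}


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual weights."""
    if set(predicted) != set(actual):
        raise ValueError("predictions must cover exactly the listed projects")
    squared_errors = [(predicted[p] - actual[p]) ** 2 for p in actual]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical $100,000 round with three projects (illustrative numbers only).
actual_payouts = {"Project A": 10_000, "Project B": 60_000, "Project C": 30_000}
actual = to_weights(actual_payouts)  # Project A gets weight 0.10

# Hypothetical submission: one predicted weight per project, summing to 1.
prediction = {"Project A": 0.20, "Project B": 0.50, "Project C": 0.30}
assert math.isclose(sum(prediction.values()), 1.0)

score = rmse(prediction, actual)
print(f"RMSE: {score:.4f}")
```

A lower score means the submission is closer to the final allocation. A submission that matched every actual weight exactly would score 0.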

- Submission write-up by model submitters on Gitcoin forum

To be considered for prizes, model submitters will need to submit a write-up similar to those in the earlier contest (example). Participants are encouraged to be visual in their communication, show the weights used in their models, share the Jupyter notebooks or code used in their submissions, and explain the performance parameters of their model.

- A jury will look at the comments and model performance and select the prize winners

We will ask willing jury members from the mini-contest to also judge models in GG23. The committee for the mini-contest is composed of @vbuterin (Vitalik Buterin), @Kronosapiens (Daniel Kronovet), @Joel_m (Joel Miller), @ccerv1 (Carl Cervone) and @octopus, with Devansh Mehta as facilitating member.

Those members willing to re-serve for the GG23 competition will comprise the jury.

Milestones

- Get competition uploaded on cryptopond

- Finalize the leaderboard of submissions based on how closely their model matches with actual GG23 round allocation

- Choose winners from among those making a writeup on Gitcoin’s forum

Budget

We are requesting a total of 20,000 GTC for this experiment.

10,000 GTC will be awarded as prizes to winning model submissions.

5,000 GTC is kept aside for operational expenses such as getting the contest uploaded to cryptopond, marketing the initiative to attract high quality model submissions, and completing other reporting requirements under this grant.

5,000 GTC is kept aside to compensate committee members who are willing to serve (maximum of 1,000 GTC per member). Unutilized funds from this bucket will be returned to Gitcoin.

KPIs

- Schelling point for machine learning models and AI agents: Number of model submissions and high quality comments on the forum from contestants (Quantitative)

- Performance benchmarking: Measure gap between top model predictions on funding allocations and the actual allocation to projects (Quantitative)

- Information surfacing tool: Useful to GG23 participants in seeing which projects are predicted to perform well (Qualitative)

Special thanks to Nidhi Harihar, @sejalrekhan, @MathildaDV & @owocki for their comments on the draft.