One other note about TrustBonus:

Right now it’s framed as a percentage multiplier on all donations, which, after learning more about the problem, I think might not be the best long-term solution…

Why? After studying Vitalik’s “On Collusion” article, we’ve considered changing the core architecture of this to a PersonhoodScore, which equates to the price of forgery of each identity in the system.

So in this framing, instead of each identity service N granting a TrustBonus percentage TB, each identity service would grant a PersonhoodScore (PS), where 1 unit of PersonhoodScore equals $1 of Price of Forgery for a new identity under that mechanism.

What does this accomplish? Well, in Vitalik’s “On Collusion” article, we learned that cheaters come in all shapes and sizes, from common petty criminals up to Justin Sun to nation-state actors behaving badly. By recognizing this core fact, we are at least able to frame the problem in crypto-economic terms and make a system that is cheaper to defend than it is to attack…

In practice, this means that whether or not a user is sybil-verified, their identity has a PersonhoodScore/Price of Forgery of $X: for up to $X in benefit you can assume the identity is valid, and beyond $X you cannot be sure.

The basic idea behind PersonhoodScore is that each identity mechanism adds some dollar amount to the price of forgery of an identity, and the PersonhoodScore can add up (or multiply) as a user integrates with more identity mechanisms.
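A minimal sketch of the additive version of this idea, assuming each mechanism has a known USD price-of-forgery estimate. The mechanism names and dollar values below are hypothetical placeholders, not real estimates for any actual service, and the post leaves open whether scores should add or multiply; this sketch assumes they add.

```python
from typing import Iterable, Mapping


def personhood_score(verified: Iterable[str], price_of_forgery: Mapping[str, float]) -> float:
    """Additive PersonhoodScore (hypothetical): sum the USD price of forgery
    contributed by each identity mechanism the user has verified with.

    Duplicates are collapsed with set() so verifying the same mechanism
    twice does not double-count it.
    """
    return sum(price_of_forgery[m] for m in set(verified))


# Hypothetical per-mechanism price-of-forgery estimates, in USD.
EXAMPLE_PRICES = {"mechanism_a": 5.0, "mechanism_b": 3.0}

print(personhood_score(["mechanism_a", "mechanism_b"], EXAMPLE_PRICES))  # 8.0
```

A multiplicative variant would replace the sum with a product of per-mechanism factors; which one better models forgery cost is exactly the open design question above.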

For example, if Rabbithole.gg were to integrate Gitcoin’s sybil resistance one day, and a user with a PersonhoodScore of 10 came to them, they could assume they can safely give that user a reward worth $10 or less.
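As a rough sketch of how an integrating app might apply this rule, assuming 1 unit of PersonhoodScore maps to exactly $1 of price of forgery; the function name `can_safely_reward` is hypothetical and not part of any existing Gitcoin API:

```python
def can_safely_reward(personhood_score: float, reward_usd: float) -> bool:
    """Hypothetical check: a reward is 'safe' if it does not exceed the
    identity's price of forgery (1 PS unit == $1)."""
    return reward_usd <= personhood_score


# A user with a PersonhoodScore of 10, as in the Rabbithole.gg example.
print(can_safely_reward(10, 10))  # True
print(can_safely_reward(10, 25))  # False
```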

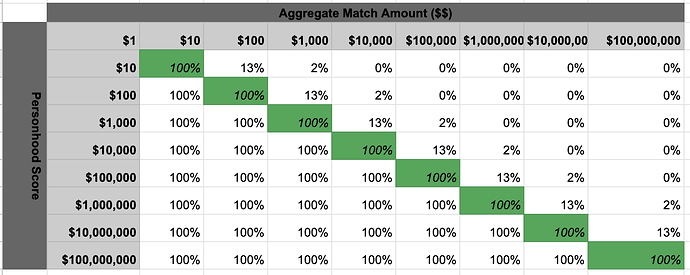

In Gitcoin Grants, this is what it would look like in a straw-man contribution dampening model that maps a user’s match amount against their PersonhoodScore, yielding the percentage of the matching amount that can be safely applied:

In this model,

- a user with a PersonhoodScore of 1000 who makes contributions that would generate $100 in matching would have 100% of that matching applied.

- a user with a PersonhoodScore of 100 who makes contributions that would generate $100 in matching would have 100% of that matching applied.

- a user with a PersonhoodScore of 10 who makes contributions that would generate $100 in matching would have 13% of that matching applied.

- and so on…
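To make the shape of the dampening idea concrete, here is a deliberately simplified sketch. It assumes a hypothetical linear cap, where matching is trusted only up to the identity’s price of forgery: fraction = min(1, PS / match). This is not the curve in the straw-man chart. It reproduces the 100% cases but gives 10% rather than 13% for a PersonhoodScore of 10, so the chart presumably uses a gentler curve. The function names are illustrative only.

```python
def matched_fraction(personhood_score: float, match_usd: float) -> float:
    """Hypothetical linear cap: trust matching only up to the identity's
    price of forgery (1 PS unit == $1)."""
    if match_usd <= 0:
        return 1.0  # nothing to dampen
    return min(1.0, personhood_score / match_usd)


def dampened_match(personhood_score: float, match_usd: float) -> float:
    """Matching amount that can be safely applied under the linear cap."""
    return match_usd * matched_fraction(personhood_score, match_usd)


for ps in (1000, 100, 10):
    print(ps, f"{matched_fraction(ps, 100):.0%}")
# 1000 100%
# 100 100%
# 10 10%
```

Swapping `matched_fraction` for a different monotone curve is how one would tune the model toward the chart’s values without changing the rest of the pipeline.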

While we’re on the topic of how to govern TrustBonus, I figured this reframe might be useful feedback for the proposer. Open to community feedback as always, though!